The EU AI Act is beginning to take force: How should organisations respond?

Find out which AI use cases pose an "unacceptable risk" and are now prohibited on the Continent as the controversial Act starts to bite.

The EU's controversial Artificial Intelligence Act (AI Act) has partially come into force, putting new pressures on companies across the Continent.

On Sunday (February 2nd 2025), Chapters I and II of the EU AI Act began to take effect, introducing AI literacy requirements and the prohibition of a "very limited number" of use cases that "pose unacceptable risks in the EU".

"To facilitate innovation in AI, the Commission will publish guidelines on AI system definition. This aims to assist the industry in determining whether a software system constitutes an AI system," the European Commission wrote.

"The Commission will also release a living repository of AI literacy practices gathered from AI systems' providers and deployers. This will encourage learning and exchange among them while ensuring that users develop the necessary skills and understanding to effectively use AI technologies.

"To help ensure compliance with the AI Act, the Commission will publish guidelines on the prohibited AI practices that are posing unacceptable risks to citizens' safety and fundamental rights."

Which AI systems are prohibited by the EU's AI Act?

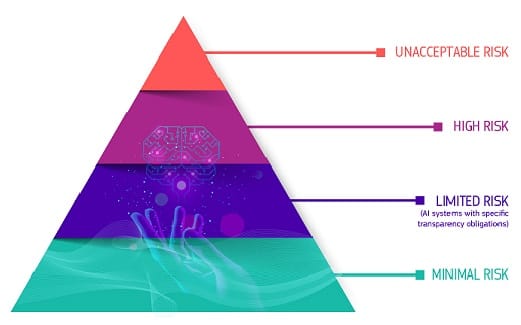

The AI Act defines four levels of risk for AI systems, which you can see in the graphic above. It bans eight practices which are "considered a clear threat to the safety, livelihoods and rights of people". These "unacceptable risks" are:

- Harmful AI-based manipulation and deception: Subliminal, manipulative and deceptive systems that materially distort behaviour, causing or likely to cause significant harm.

- AI-based exploitation of vulnerabilities: Systems that exploit individuals’ vulnerabilities due to age, disability, or social and economic circumstances, distorting behaviour and causing or likely to cause significant harm.

- Social scoring: Evaluating individuals based on social behaviour or personality over time, leading to unjustified, disproportionate, or contextually irrelevant detrimental treatment.

- Individual criminal offence risk assessment or prediction: This Minority Report-style prohibition bans AI systems predicting an individual’s likelihood of committing a future crime based on personality or profiling.

- Facial recognition databases: Untargeted scraping of the internet or CCTV material to create or expand facial recognition databases.

- Emotion recognition: AI systems inferring emotions in workplace or educational environments.

- Biometric categorisation: Assigning individuals to categories based on biometric data to infer protected characteristics such as ethnicity, sexual orientation or religion.

- Facial recognition for crime prevention: A ban on real-time remote biometric identification for law enforcement purposes in publicly accessible spaces - with a few exceptions.
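For teams starting an internal audit, the eight categories above can be turned into a simple screening list. The following is only a minimal sketch: the enum names, the `UseCase` record and the example inventory are hypothetical, the tags are assumed to be assigned by a human reviewer, and a flagged entry means "escalate to legal review", not "confirmed breach".

```python
from dataclasses import dataclass, field
from enum import Enum


class ProhibitedPractice(Enum):
    """The eight banned categories listed in the article (labels are shorthand)."""
    MANIPULATION = "harmful manipulation and deception"
    EXPLOITING_VULNERABILITIES = "exploitation of vulnerabilities"
    SOCIAL_SCORING = "social scoring"
    CRIME_PREDICTION = "individual criminal offence risk prediction"
    FACE_SCRAPING = "untargeted scraping for facial recognition databases"
    EMOTION_RECOGNITION = "emotion recognition at work or in education"
    BIOMETRIC_CATEGORISATION = "biometric categorisation of protected characteristics"
    REALTIME_BIOMETRIC_ID = "real-time remote biometric identification by law enforcement"


@dataclass
class UseCase:
    name: str
    # Tags come from a human reviewer; nothing here detects them automatically.
    tags: set[ProhibitedPractice] = field(default_factory=set)


def flag_for_review(inventory: list[UseCase]) -> dict[str, list[str]]:
    """Return every use case that carries at least one prohibited-practice tag."""
    return {
        uc.name: sorted(p.value for p in uc.tags)
        for uc in inventory
        if uc.tags
    }


# Hypothetical inventory for illustration only.
inventory = [
    UseCase("CV keyword search"),
    UseCase("Call-centre mood tracker", {ProhibitedPractice.EMOTION_RECOGNITION}),
]
flagged = flag_for_review(inventory)
for name, reasons in flagged.items():
    print(f"{name}: {', '.join(reasons)}")
```

Keeping the categories in one enum means the inventory and any later reporting share the same vocabulary, which makes it easier to update the list once the Commission's guidelines on prohibited practices are published.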

What is AI literacy?

The AI literacy requirement is set out in Chapter I, Article 4 of the Act. Michael Curry, president of data modernisation at Rocket Software – a specialist in AI and IT modernisation – told Machine: “The EU AI Act mandates compulsory AI literacy for any business that either uses or develops AI systems. This regulation is focused on awareness and education, as it requires businesses to take ownership of the upskilling of their own workforce and create an educational structure internally that will equip their employees with the necessary skills to use these systems responsibly and ethically.

“This will be a crucial element of compliance going forward, but it’s also a necessary one: workforces that lack the knowledge to operate their AI-powered systems will never see the true return on investment on their innovations, and moreover, the abuse of AI carries the potential to do huge damage to any company. From data leaks to AI hallucinations, organisations need to take the AI literacy training of their employees just as seriously as they would if they were operating heavy equipment.

"Any organisation that uses AI for HR or personal evaluation purposes needs to do a thorough audit of their processes to ensure they remain compliant. To achieve this, businesses need to adapt robust privacy policies surrounding their AI systems, as well as access controls to protect sensitive data. Now is the time to set up comprehensive monitoring systems that ensure the AI used continues to adhere to these regulations and any potential breach is immediately flagged, so organisations can manage risks accordingly.”

What is a high-risk use case under the EU AI Act?

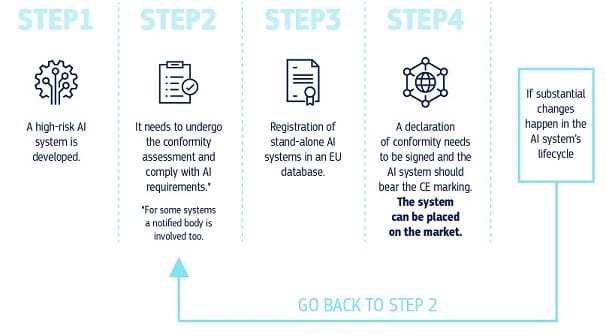

When the rest of the Act comes into force, high-risk AI systems must meet strict requirements before deployment. These will include thorough risk assessment, high-quality datasets to prevent bias, and activity logging for traceability. The systems must have detailed documentation for regulatory review, clear information for deployers, and appropriate human oversight. Additionally, they must ensure robustness, cybersecurity, and accuracy.
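The requirements in the paragraph above lend themselves to a basic gap check. This is a rough sketch under stated assumptions: the requirement labels paraphrase the article rather than the legal text, and the `evidence` record is a hypothetical internal tracking structure, not anything defined by the Act.

```python
# Requirement labels paraphrased from the article, not quoted from the Act.
HIGH_RISK_REQUIREMENTS = (
    "risk assessment",
    "high-quality datasets",
    "activity logging",
    "detailed documentation",
    "information for deployers",
    "human oversight",
    "robustness, cybersecurity and accuracy",
)


def missing_requirements(evidence: dict[str, bool]) -> list[str]:
    """List requirements with no recorded evidence, in checklist order."""
    return [req for req in HIGH_RISK_REQUIREMENTS if not evidence.get(req, False)]


# Hypothetical system record: only two items have evidence so far.
evidence = {"risk assessment": True, "activity logging": True}
gaps = missing_requirements(evidence)
print(f"{len(gaps)} requirement(s) still open: {gaps}")
```

A check like this only tracks whether evidence has been recorded; judging whether that evidence is adequate remains a matter for compliance and legal teams.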

High-risk use cases are those that pose serious risks to health, safety, or fundamental rights. They include AI components in critical infrastructure, such as transport systems, where failures could endanger lives. AI applications in education that influence access to learning and career prospects, such as exam-scoring systems, also fall into this category. Similarly, AI-driven safety components in medical devices, like robot-assisted surgery, are considered high-risk.

Employment-related AI tools, including CV-sorting software used in recruitment, are also classified as high-risk due to their impact on job opportunities. AI systems that determine access to essential services, such as credit scoring for loans, are included as well. Furthermore, AI used for biometric identification, emotion recognition, and categorisation - such as systems that retrospectively identify shoplifters - raises concerns about privacy and surveillance.

Law enforcement applications, such as AI tools assessing the reliability of evidence, and AI systems used in migration, asylum, and border control, are deemed high-risk due to their potential to infringe on fundamental rights. AI in judicial and democratic processes, including tools for preparing court rulings, is also subject to strict regulation. Find out more by reading Chapter III of the Act.

Will the EU AI Act hamper innovation and competitiveness?

Europe is competing on a world stage against rivals such as the US and China, which are often seen as low-touch or even no-touch regulators. In practice, China’s regulations are stringent but focused on state control, and the US is likely to adopt a more flexible, sector-specific approach. Both stand in stark contrast to the more interventionist European approach.

Although many industry insiders fear that the EU is tying its own hands and will lose ground to competitors with a lighter regulatory touch, Diyan Bogdanov, Director of Engineering Intelligence & Growth at Payhawk, argues that it could actually position Europe as a world leader.

"The EU AI Act isn't just another compliance burden - it's a framework for building better AI systems, particularly in financial services," he said. "By classifying finance applications like credit scoring and insurance pricing as 'high-risk' the Act acknowledges what we've long believed: when it comes to financial services, AI systems must be purposeful, precise, and transparent.

"We're already seeing this play out in the market. While some chase the allure of general-purpose AI, leading financial companies are embracing what we call "right-sized" AI, focusing on ‘targeted automation’ through AI agents and/or the deployment of smaller-scale models — all within robust governance frameworks.

"Europe is setting the global standard for how AI should work in financial services - and it's exactly what the industry needs. While the US and China compete to build the biggest AI models, Europe is showing leadership in building the most trustworthy ones. The EU AI Act's requirements around bias detection, regular risk assessments, and human oversight aren't limiting innovation — they're defining what good looks like in financial services AI.

"This regulatory framework gives European companies a significant advantage. As global markets increasingly demand transparent, accountable AI systems, Europe's approach will likely become the de facto standard for financial services worldwide."

Eduardo Crespo, VP EMEA at PagerDuty, said: “The recent news cycles around DeepSeek AI showcased the highly competitive and rapidly evolving nature of AI technology, with many predicting LLM models will be commoditised come 2026. The EU AI Act rollout is timely as it brings two areas into focus – technical management and people strategy. AI providers need to run tight controls in terms of functionality.

"Regulation mandates against tools employing “subliminal techniques beyond a person’s consciousness or purposefully manipulative or deceptive techniques”. Providers need to ensure there is no room within products for features or bugs that could cause harm.

“With the implementation passed, fines up to 7% of a company's annual turnover can be levied for non-compliance. From a people perspective, companies need to demonstrate real policy development around AI learning, promoting technical knowledge through experience and education, to minimise risk. These are mandates that cannot be ignored.

"As the EU AI Act shifts into gear, it’s vital to get legal frameworks and governance aligned before any serious AI investment is considered.”

Preparing to comply with the EU AI Act

Sheldon Lachambre, Director of Engineering at DoiT, warned that businesses must remember the slip-ups made during cloud adoption and avoid repeating them as they respond to the new legislation.

"The EU AI Act underscores the critical importance of thoughtful and effective AI adoption. As these regulations take shape, the need for businesses to adopt AI tactically and responsibly has never been more apparent," Lachambre said.

“With this in mind, businesses must learn from the mistakes of rapid cloud adoption and approach AI with caution and a strategic mindset. As cloud transformation requires meticulous planning and cost management, so does AI implementation as well.

“To navigate the changing landscape, business leaders should look to FinOps — a framework and culture that, at its core, prioritises data-driven decision making, financial accountability, and cross-team collaboration. This will enable organisations to manage escalating costs, measure ROI, and allocate resources efficiently, ensuring AI integration drives meaningful, sustainable and efficient outcomes.”

Steve Lester, CTO at business services consultancy Paragon, highlighted the importance of ethical AI practices, transparency, and compliance.

"For all businesses that operate on the EU market, transparency and ethical AI practices will be absolutely key. In customer communications, this means going beyond simply disclosing AI use. Companies need to ensure their targeting and personalisation strategies completely avoid newly prohibited practices like manipulative AI systems, social scoring, or biometric categorisation.

"Companies will need to conduct thorough audits of their AI systems, focusing on identifying and eliminating practices banned under the Act's initial chapters. Investing in staff training on AI ethics is also crucial, with businesses needing to create flexible governance frameworks that can quickly adapt to new regulatory requirements.

"It's all about maintaining trust with EU customers, proving that responsible AI development can work hand in hand with robust risk management, compliance, and ethical practices – especially as the first wave of the EU AI Act's provisions start to come into sharp focus."
