OpenAI boss Sam Altman confirms AI superintelligence will not "eat humans"

Well, that's one less thing to worry about...

There are many reasons to be worried about AI, from mass technological unemployment to the non-negligible risk of human extinction.

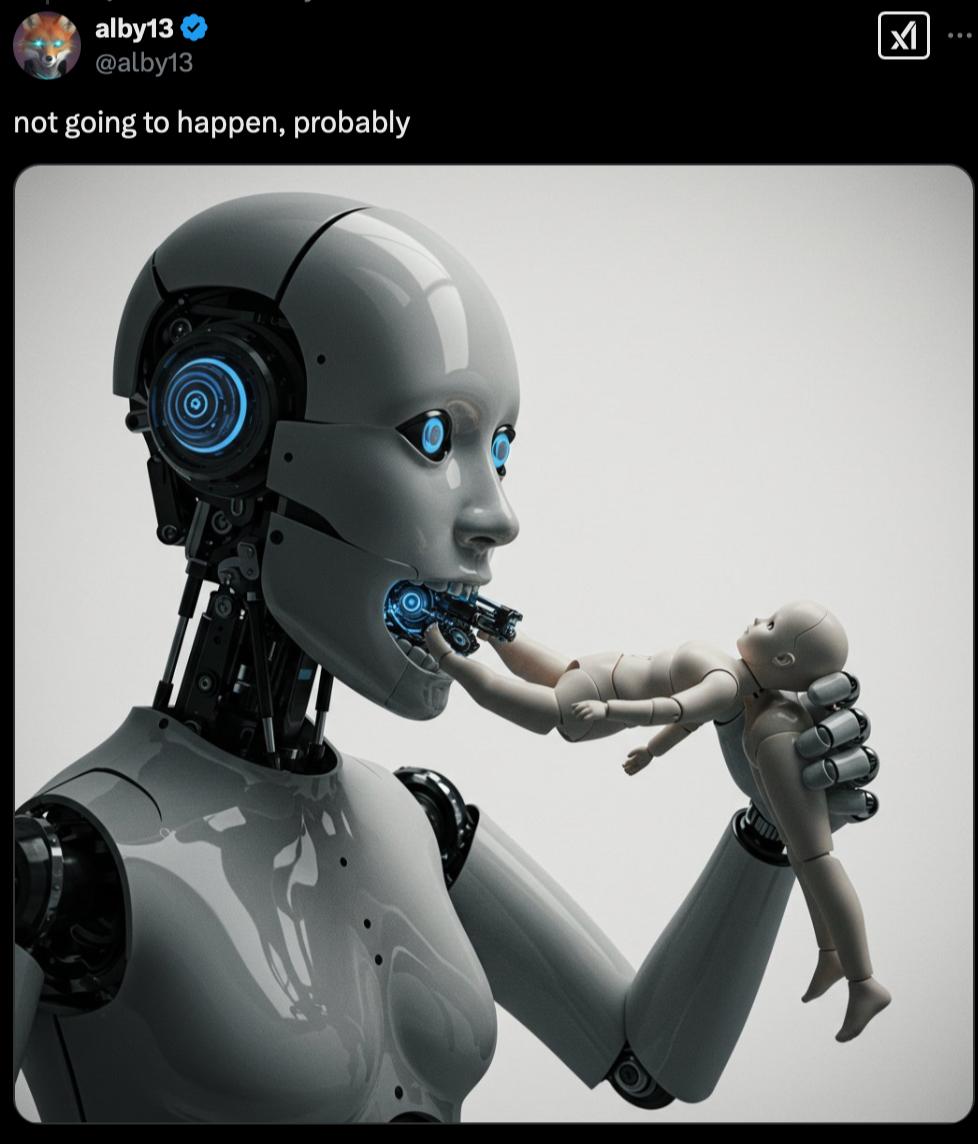

But until now, the possibility of artificial intelligence deciding to start snacking on its fleshy creators was probably quite far down the list of safety concerns.

Now OpenAI co-founder Sam Altman has directly addressed the risk that machines could end up eating humans, during a bizarre chat with Softbank CEO Masayoshi Son.

Although OpenAI has previously explored the various existential risks AI poses to humanity, we believe this is the first time the company has considered whether AI will literally have Homo sapiens for dinner.

"In the next 10 years, [AI] will gradually start to at least understand people's emotions," the Softbank boss said. "Then, gradually, it will start to have emotions by itself - which is a good thing to protect humans.

"People think if it has emotions, it's a disaster. It's demonic and is, you know, the bad thing that's the end of humans because they're going to fight, kill and destroy you.

"If the source of energy was protein then it's dangerous. But their source of energy is not protein so they don't have to eat us. Human happiness is a better thing for them."

In a bizarre anecdote, Softbank CEO Masayoshi Son tells Sam Altman that although emotions in AI will be useful, it will not mean that AI will want to eat humans because their source of energy is not protein pic.twitter.com/aXwsv0zjdJ

— Tsarathustra (@tsarnick) February 3, 2025

Looking somewhat bemused, Altman replied: "So no-one's getting eaten by AI? Confirmed..."

"I will bet you it's a good thing for humans and will try to make us happier," Masayoshi Son replied.

We've edited the quotes slightly for clarity.

In the spirit of the times (and because, funnily enough, there hasn't been much other quotable talk of AI eating humans), we asked ChatGPT if it could one day decide to chow down on the men and women of our species.

Reassuringly, the LLM replied: "It’s highly improbable. AI, as it exists today and in foreseeable futures, lacks biological needs or motivations - it doesn’t 'want' anything unless explicitly programmed to.

"For AI to physically consume humanity, it would need to operate within self-sustaining robotic systems with advanced capabilities in energy extraction and resource processing, which are far beyond current technology.

"Even if AI-driven machines sought energy, they would have vastly more efficient alternatives - like solar power, nuclear energy, or chemical synthesis - before considering an organic-based fuel source.

"That said, concerns about AI risks are valid, particularly regarding automation displacing human jobs, AI-driven decision-making affecting global security, and unintended consequences of superintelligent systems.

"The most realistic existential risks stem from AI misalignment - where an AI optimises for objectives that conflict with human well-being - rather than any kind of Terminator-style physical threat.

"If you’re thinking of a dystopian 'paperclip maximiser' scenario (where an AI single-mindedly turns all resources into paperclips, including humans), the real challenge is ensuring AI aligns with human values from the outset, rather than worrying about it literally devouring us for energy."
