Weapons of mass deception: Russia and China's "digital arsenals" exposed

EU investigation uncovers "foreign information manipulation and interference" campaigns conducted globally.

The European Union has warned that "malign actors" from autocratic nations are conducting online psyops and propaganda campaigns on an industrial scale.

Both China and Russia have trained their "massive digital arsenals" on the West and other regions in a bid to destabilise societies, sabotage the mechanisms of democracy and sow discord among allies or within the populations of sovereign nations.

Investigators from the European External Action Service (EEAS), the EU's diplomatic service, found that more than 80 countries and 200 organisations were hit by foreign information manipulation and interference (FIMI) campaigns in 2024.

Kaja Kallas, EU High Representative for Foreign Affairs and Security Policy and Vice-President of the European Commission, warned: "Our information space has become a geopolitical battleground."

She added: "Foreign actors use FIMI to manipulate public opinion, fuel polarisation, and interfere with democratic processes. The aim is to destabilise our societies, damage our democracies, drive wedges between us and our partners and undermine the EU’s global standing.

"FIMI is not merely a tool for disseminating deceptive narratives. It is an integral part of military operations used by foreign states to lay the way for kinetic action on the ground."

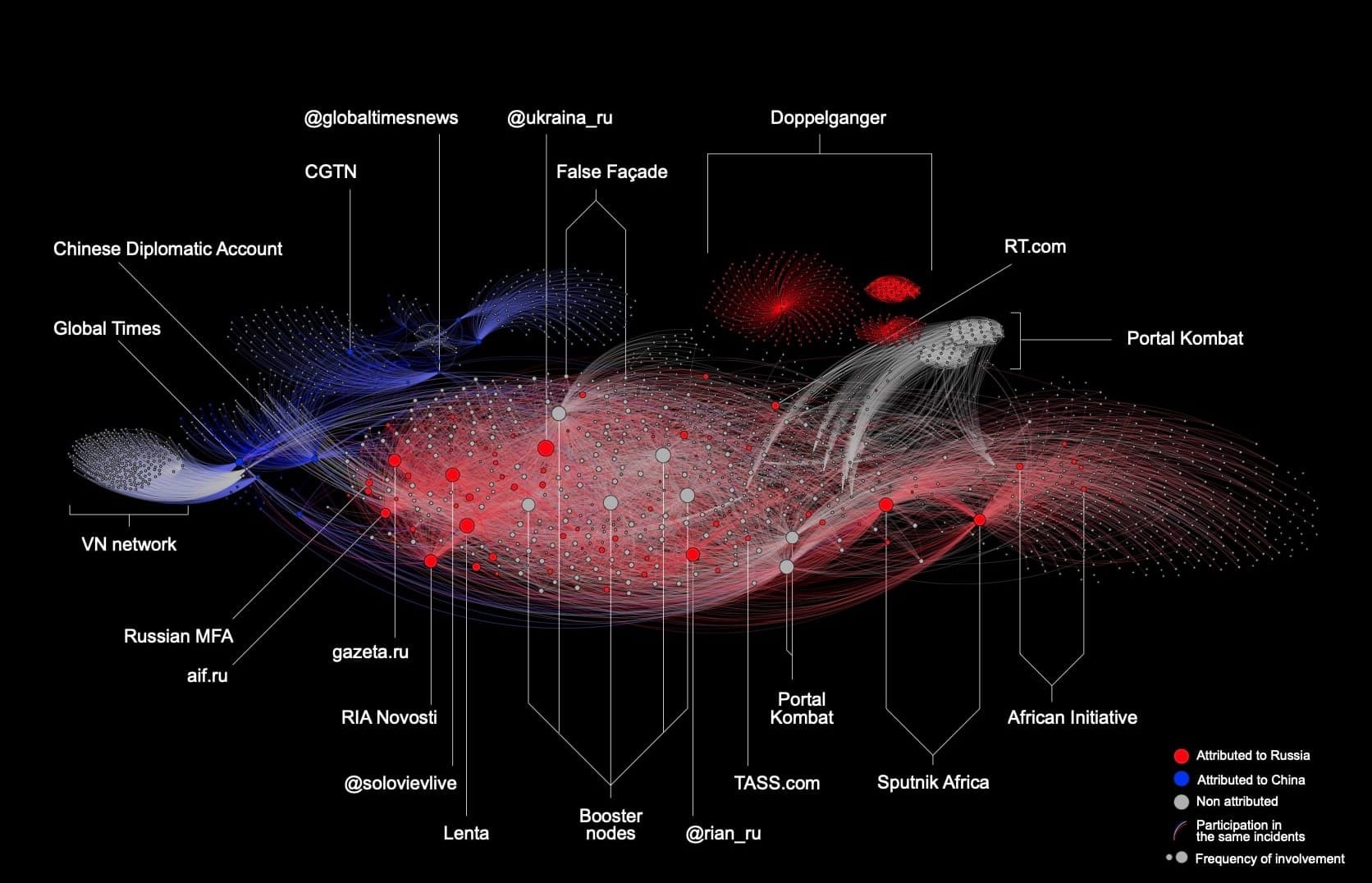

The study analysed a sample of 505 FIMI incidents recorded in 2024, which involved more than 38,000 channels, revealing a "vast online infrastructure" of deceit.

Ukraine has been the main target of FIMI attacks, accounting for half of the recorded incidents. These attacks aimed to weaken resistance to Russia's invasion.

Elsewhere, Russia sought to undermine international support for Ukraine.

France was hit by 152 FIMI campaigns, with the Paris Olympic and Paralympic Games and legislative elections among the main targets.

Germany also faced 73 attacks, focused on political events, international visits and episodes of unrest such as farmers’ protests.

Moldova and Sub-Saharan Africa were also "heavily" targeted, and Nato members beyond France and Germany faced FIMI assaults too.

During a year in which more than half of the world's voting population went to the polls, elections came under attack: at least 42 Russian FIMI campaigns were linked to June's European Parliament elections.

According to the report, X accounted for 88% of the detected activity. Key tactics, techniques and procedures (TTPs) included bot networks and coordinated inauthentic behaviour, as well as impersonating genuine news outlets and creating inauthentic news websites.

Generative AI (GenAI) is proving to be a force multiplier, enabling digital propagandists to automatically generate highly convincing content in pursuit of their geopolitical goals.

Most FIMI incidents relied on basic tactics such as posting text or images, and 349 of them tailored content to local audiences using cultural cues and language, sometimes via targeted ads.

Bot networks made up 73% of FIMI channels, boosting content visibility while remaining hard to trace. Impersonation was common, exploiting public trust to achieve shadowy political goals: the report counted 124 cases involving media outlets, 127 involving organisations and 13 involving public figures. A further 41 cases involved deepfakes or automated distribution, though the overall impact of this technology remains unclear.

Inside Russia and China's FIMI campaigns

Russia "perceives the information space as a warfighting domain", the EU warned.

"Using a combination of state and non-state actors, Russia has developed a multi-layered strategy and has sought to shape global narratives to advance Moscow’s geopolitical objectives," it wrote. "By focusing on exerting long-term influence rather than isolated incidents, Russia continues to exploit weaknesses in the global information landscape, making its FIMI tactics a serious security concern for the European Union."

Russia uses a mix of state-backed media, proxies, and digital tactics, blending disinformation, impersonation, and narrative engineering.

Key tools include censorship at home, global propaganda arms such as state-backed media organisations, and covert tactics such as fake fact-checking and social media flooding. Messaging is hyper-localised to exploit societal rifts, fuelling anti-West, anti-immigrant and culture war sentiments, while Russia's diplomatic channels double as propaganda amplifiers.

In 2024, China ramped up global influence operations, targeting Taiwanese and US elections while using state media and covert networks to push pro-Beijing narratives, especially in Africa and around the South China Sea. It blends official channels with influencers, PR firms and partnerships to mask state ties and boost credibility.

Suppression is a key tactic, extending to diaspora intimidation and legal threats. These repressive methods often accompany China’s economic and cyber influence. Narratives focus on defending China’s global image, attacking Western hypocrisy, and justifying policies in sensitive regions like Xinjiang and Taiwan. Coordination with Russia is growing but remains tactical.

"China’s strong efforts to defend its international image continue in 2024, especially regarding human rights, the South China Sea, and areas it deems exclusively domestic, such as Xinjiang, Tibet, Hong Kong and Taiwan," the EU wrote.

"At the same time, Chinese actors regularly leverage international conflicts (e.g. Russia’s war of aggression against Ukraine, Gaza) as vectors for projecting China’s positive role in the global arena, often pairing it with offensive narratives targeting 'the West' as hypocritical and inefficient vis-à-vis the Chinese model of international engagement."

It added: "Over the coming years, we expect China will continue expanding its reach and set of FIMI TTPs, leveraging vectors at both state and sub-national level, enhancing the public-private partnerships, experimenting more with emerging technology, and exporting more domestic practices to help suppress unfavourable information."

FIMI and loathing in cyberspace: Propaganda groups in focus

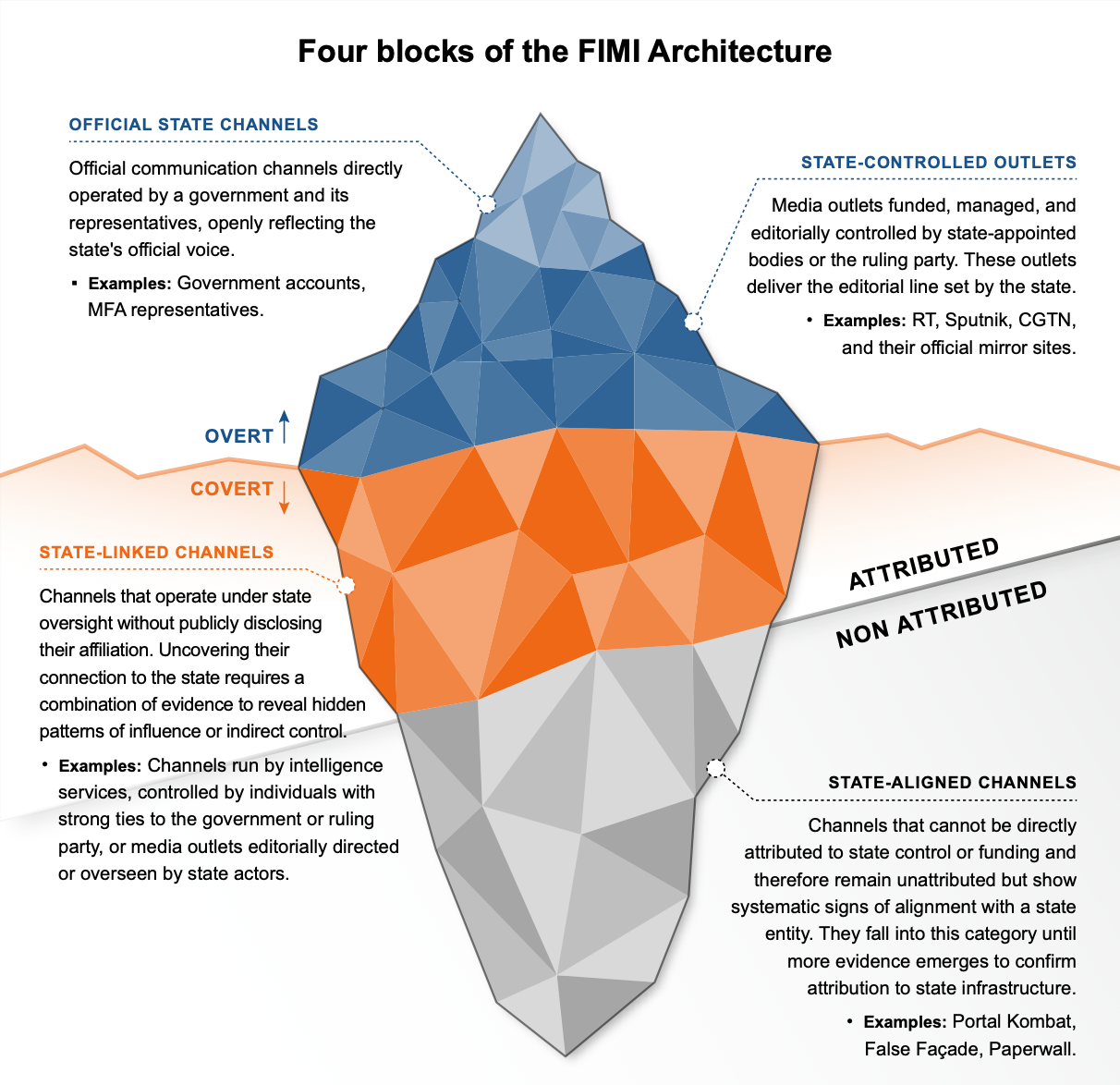

The report introduced a new analytical tool called the FIMI Exposure Matrix. It found that Russia dominates the landscape, accounting for 20% of the activity mapped. China was linked to 3.5%, while the remaining 76.5% came from channels that were not formally attributed to either state but were aligned with one.

Russia’s FIMI structure is decentralised and made up of official outlets, covert proxies and grey-zone actors such as blogs or influencers. China, by contrast, runs a more centralised and synchronised network, using state media, diplomatic accounts and covert PR assets to reinforce narratives on sensitive domestic issues and reshape international perceptions.

Core FIMI nodes fall into three roles: content generators, boosters, and bridges linking different regions and platforms. The system is multi-platform and highly adaptable, using Telegram, X, Meta's platforms, YouTube, Quora and even Bluesky. Content is reshaped into videos, memes or AI-generated formats and then "laundered" to obscure its origins and increase its apparent legitimacy.

Russian and Chinese operations sometimes cross-amplify, especially around anti-Western themes, though this alignment is typically opportunistic. Patterns such as coordinated activation, content recycling, and targeted localisation allow narratives to penetrate deeply into regional echo chambers.

Here are some examples of FIMI actors.

- Doppelgänger: A Russian FIMI campaign using 25,000 fake accounts and 228 domains across nine languages to impersonate Western media and spread pro-Kremlin disinfo. Run by state-linked firms, it drives traffic through Meta ads, manipulates algorithms, and adapts quickly to takedowns via re-registration and backup domains. Its self-contained structure avoids direct ties to official Russian channels.

- False Façade: Also known as Storm-1516 or CopyCop, this group operates 230 fake news sites mimicking Western outlets, using AI translations and staged videos to launder pro-Russian content back into state media. It targets Western leaders and elections, relying on pre-made backup sites and influencers for resilience and amplification.

- Portal Kombat: This organisation runs 200 fake media sites in 35 languages, mainly republishing Russian propaganda with a focus on Africa and localised content. Using automation and low-cost saturation tactics, it seeks visibility in regional info-spaces. It amplifies both African Initiative and, occasionally, False Façade, and recently began expanding on X.

- African Initiative: Russia’s primary FIMI hub in Africa, with 16 multilingual sites pushing Kremlin-aligned narratives through local networks, often recycled by Russian state media and boosted by Portal Kombat. It acts as a strategic bridge between Russian and African disinfo clusters and has been sanctioned by the EU.

It is worth noting that this report is biased in favour of the EU. Europe likely engages in digital campaigns of its own, which we would also like to cover.

Machine is looking to hear from China and Russia regarding the West's propaganda and cyberwarfare campaigns. Please contact us at the address below if this is something you can help with. We are - and will remain - scrupulously neutral in our reporting.

Have you got a story or insights to share? Get in touch and let us know.