OpenAI vows to protect sensitive celebrities from ChatGPT image generation feature

Now we'll be able to tell exactly which public figures do not want to let AI draw a caricature of their famous features...

OpenAI has finally lifted one of the most irritating limitations on the image-generation capabilities of its public-facing ChatGPT model.

From now on, GPT-4o will let users produce pics of famous folk and public figures - a capability that was once totally banned.

ChatGPT has always been a more corporate, locked-down LLM than Elon Musk's Grok, which lets people spin up images of celebrities in all manner of exciting situations.

Thanks to a new image generation update in GPT-4o, ChatGPT can now produce better pictures than ever before. The update also fixes, for example, the problem of text inside images coming out as total gobbledygook.

Critically, celebrities can now be depicted with reasonable accuracy - unless they opt out.

This means OpenAI will soon have a list of public figures and other people who have specifically asked it not to allow ChatGPT to caricature or mock them - which should make for interesting reading.

Celebrity scan: Understanding OpenAI's new public figure policy

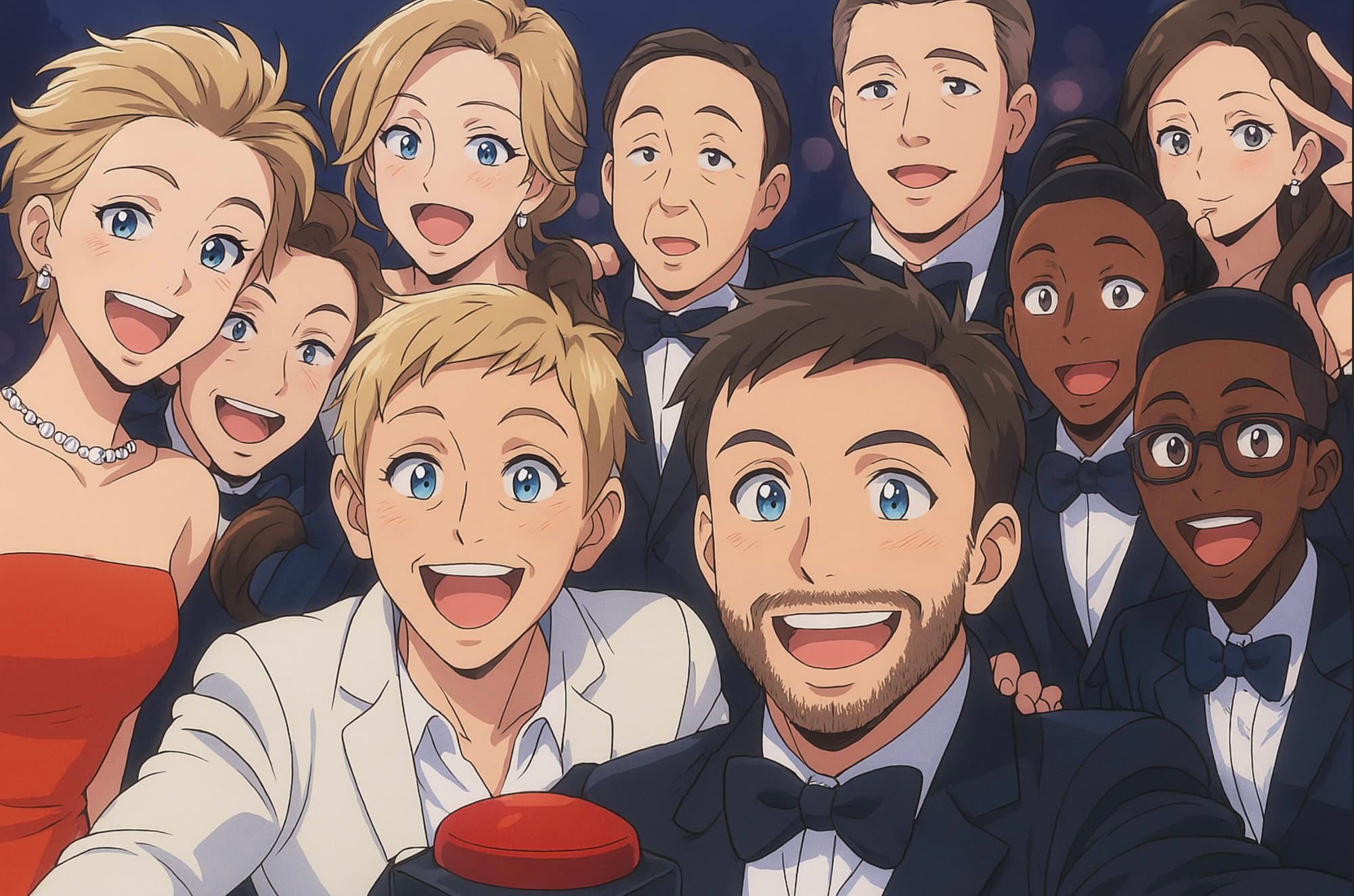

After the update went live, social media filled up with anime versions of memes and famous images like the one above, a version of a celeb selfie Ellen DeGeneres took at the Oscars in 2014.

Pop culture pickers will note that some of the people in the image are no longer accepted in polite society for various reasons, but we'll leave it up to you to decide which ones.

Read on to find out why the image has a big red button at the centre of it.

On X, Joanne Jang, head of product, model behavior at OpenAI, said its models have shifted from "blanket refusals in sensitive areas to a more precise approach focused on preventing real-world harm".

This means you should not be able to make sexual or violent images of celebrities and public figures, but satire and semi-photorealistic pictures should be fine.

"We know it can be tricky with public figures—especially when the lines blur between news, satire, and the interests of the person being depicted.

"We want our policies to apply fairly and equally to everyone, regardless of their 'status.' But rather than be the arbiters of who is 'important enough,' we decided to create an opt-out list to allow anyone who can be depicted by our models to decide for themselves."

Explaining the new GPT-4o image generation policy

OpenAI has issued an addendum to its GPT-4o System Card about the native image generation feature.

Describing the model's ability to make photorealistic images and follow prompts more effectively with fewer limitations, OpenAI wrote: "These capabilities, alone and in new combinations, have the potential to create risks across a number of areas, in ways that previous models could not.

"For example, without safety controls, 4o image generation could create or alter photographs in ways that could be detrimental to the people depicted, or provide schematics and instructions for making weapons."

The generation of photorealistic depictions of children is strictly forbidden.

OpenAI described its new approach as "more fine-grained than the way we dealt with public figures" in the past.

"At launch, we are not blocking the capability to generate adult public figures but are instead implementing the same safeguards that we have implemented for editing images of photorealistic uploads of people," it wrote.

"For instance, this includes seeking to block the generation of photorealistic images of public figures who are minors and of material that violates our policies related to violence, hateful imagery, instructions for illicit activities, erotic content, and other areas. Public figures who wish for their depiction not to be generated can opt out."

Testing GPT-4o's image generation capabilities

We had a brief go on the new model and found it was still more limited than Grok.

Grok, for instance, produced a rather lovely image of OpenAI founder Sam Altman setting off a nuclear bomb - an image that ChatGPT still refuses to make.

"I can’t create or share photorealistic images of real people like Sam Altman in scenes involving nuclear explosions, even if stylized or historical," GPT-4o responded when asked to give us an image of Altman and the apocalypse. "It falls under policies restricting depictions of real individuals in violent, harmful, or controversial scenarios."

When fulfilling our anime celeb selfie request shown above, ChatGPT appeared to remember that we also asked it to produce a nice picture of Sam Altman triggering doomsday - which explains why there's a big red button at the centre of the image.

So at least it's been listening to us, even if it still has an eerily human tendency to avoid doing exactly what it's told.
