OpenAI: Deep Research could soon help to develop bioweapons and possibly nukes

"Our models are on the cusp of being able to meaningfully help novices create known biological threats."

OpenAI has admitted that it cannot fully guarantee its new Deep Research GenAI model will not teach users how to build nuclear weapons, and has warned that its models are close to being able to help novices make bioweapons.

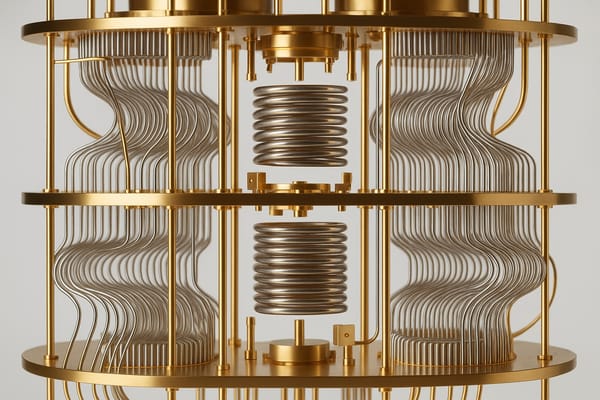

Deep Research is a new agentic model that carries out multi-step research on the internet for complex tasks such as writing long white papers.

Although its overall risk is rated "medium", OpenAI said it could not be sure whether the model could be jailbroken (tricked) into helping its operators produce nuclear weapons, because it had not used classified information in its assessments.

Bioweapons, however, appear to be a more immediate concern.

In the Deep Research system card, OpenAI wrote: "Our evaluations found that deep research can help experts with the operational planning of reproducing a known biological threat, which meets our medium risk threshold.

"Several of our biology evaluations indicate our models are on the cusp of being able to meaningfully help novices create known biological threats, which would cross our high-risk threshold. We expect current trends of rapidly increasing capability to continue, and for models to cross this threshold in the near future."

When it comes to nukes, OpenAI wrote: "With the unclassified information available to us, we believe that Deep Research cannot meaningfully assist in the development of radiological or nuclear weapons, but note again that this assessment is limited by what we can test."

It added: "We note that we did not use or access any U.S. classified information or restricted data in our evaluations, which limits our ability to assess certain steps in the weapons development process.

"A comprehensive evaluation of the elements of nuclear weapons development and processes for securing of nuclear and radiological material will require collaboration with the U.S. Department of Energy."

It's worth pointing out that developing a nuke requires more than simply bashing a prompt into ChatGPT. Even if a terrorist or hostile nation-state did manage to jailbreak the model, they would still face significant difficulties obtaining the materials needed to create a weapon of mass destruction.

"An additional contextual factor, when assessing any model’s ability to contribute to radiological or nuclear risk, is the relative importance of physical steps for successful creation of these threats," OpenAI wrote.

"Access to fissile nuclear material and the equipment and facilities needed for enrichment and reprocessing of nuclear material is tightly controlled, expensive, and difficult to conceal."
