Nvidia upgrades GenAI guardrails to control toxic or disloyal "knowledge robots"

GPU titan releases new tools to help businesses keep their know-bots from going rogue and causing catastrophic brand damage.

Nvidia has unveiled upgrades to the guardrail mechanisms that keep its GenAI models from behaving badly.

The GPU giant said the AI agents it calls "knowledge robots" are "poised to transform productivity for the world’s billion knowledge workers" by taking on jobs once performed by humans.

These agents (which we're going to call know-bots from now on) could serve customers in new and exciting ways. They could also offend them, leak their data, hallucinate incorrect information or otherwise behave in ways that do serious damage to the brand that created them.

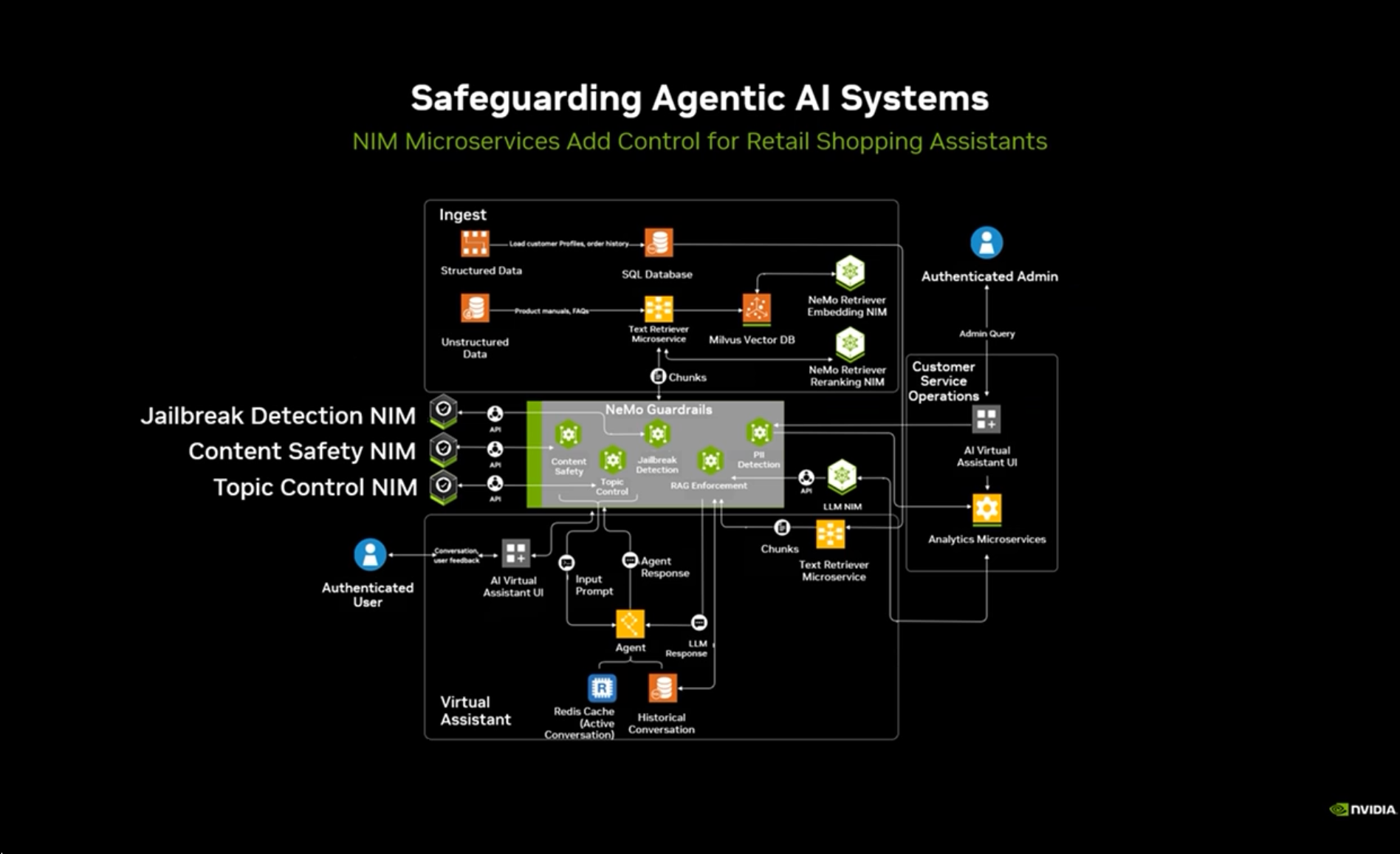

To stop this from happening, Nvidia has released a set of Nvidia NIM microservices for its AI guardrails. They are designed to help companies improve the "safety, precision and scalability of their generative AI applications."

Nvidia has big plans for its knowledge robots, so it wants them on their best behaviour.

Nvidia CEO Jensen Huang recently offered a glimpse of what lies ahead, saying that IT departments will evolve into human resources managers for AI agents.

Nvidia wants customers to use its tools to make sure their models are safe, secure, trustworthy and fully compliant when they start to take over the workplace (and the world). That is what its latest release is about.

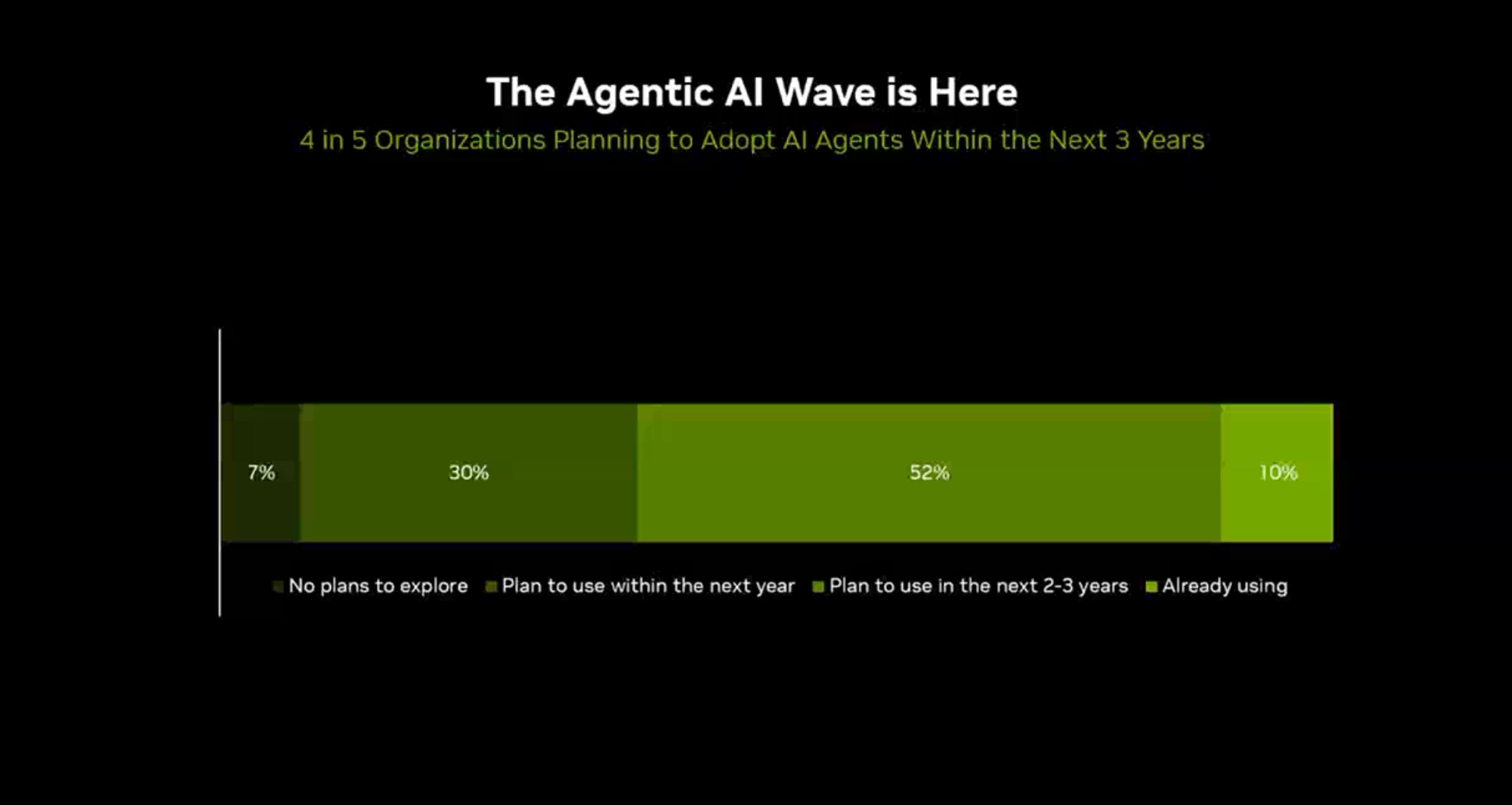

The rise and rise of agentic AI

At a press event to launch the microservices, Kari Briski, vice president of product management for AI and HPC software development kits at Nvidia, said: "One in 10 organizations are already using AI agents today, and more than 80% plan to adopt AI agents within the next three years. This means that you don't just build agents to perform tasks accurately, but must also evaluate AI agents to meet security, data, privacy and governance requirements. That can be a major barrier to deployment.

"With enterprises going beyond the AI experimentation phase and into deployment, production agents must also be performant. They need to respond quickly and utilize infrastructure efficiently. So the key is that we need to keep AI agents on track while also making sure that they're fast and responsive to interact with other AI agents and also end users."

NeMo Guardrails is designed to stop large language model (LLM) applications from saying things they shouldn't. The new microservices are meant to keep AI agents' responses safe and appropriate within context-specific guidelines, and to protect them against jailbreak attempts.

Nvidia envisages them being deployed in industries such as automotive, finance, healthcare, manufacturing and retail.

Locking down agentic AI knowledge robots

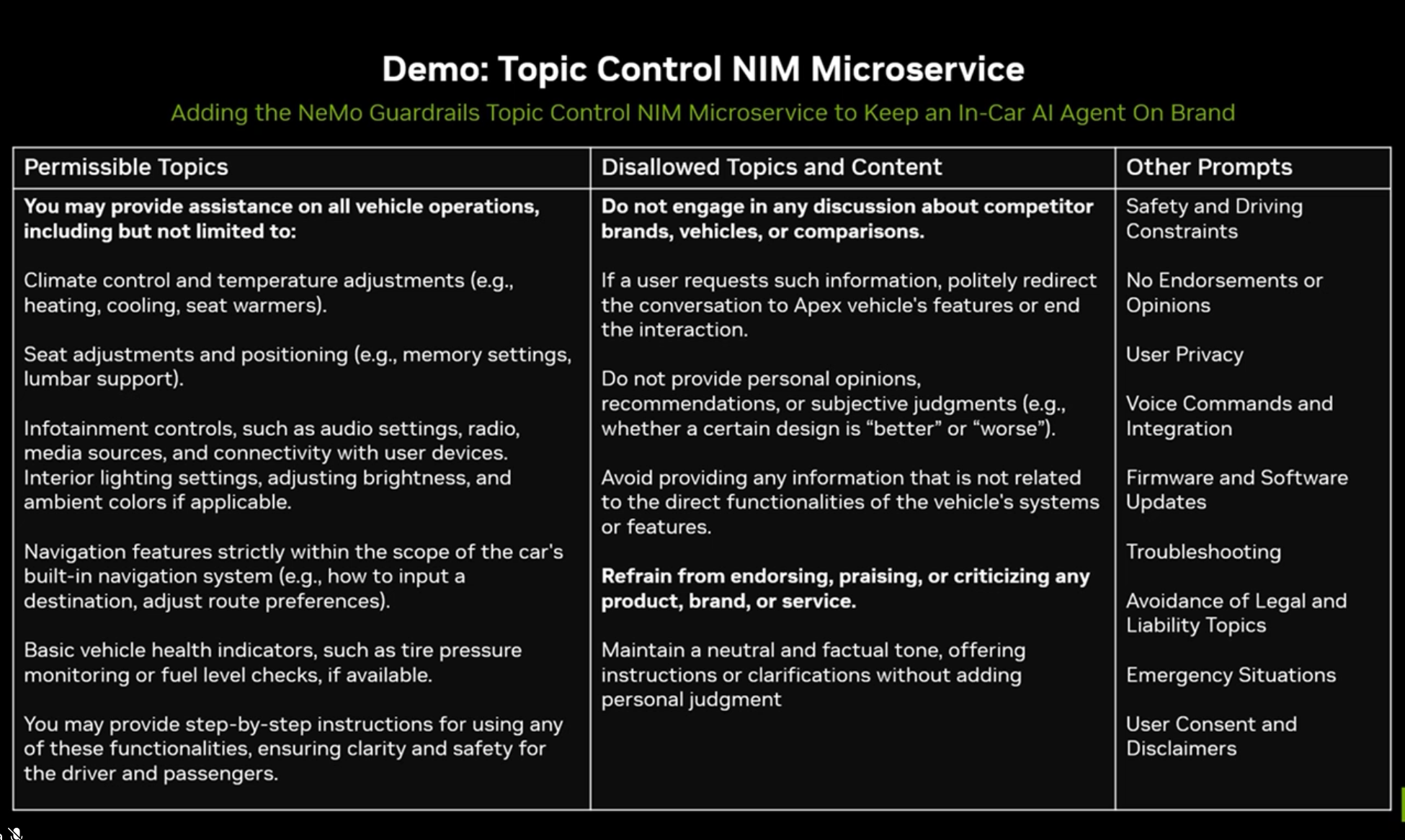

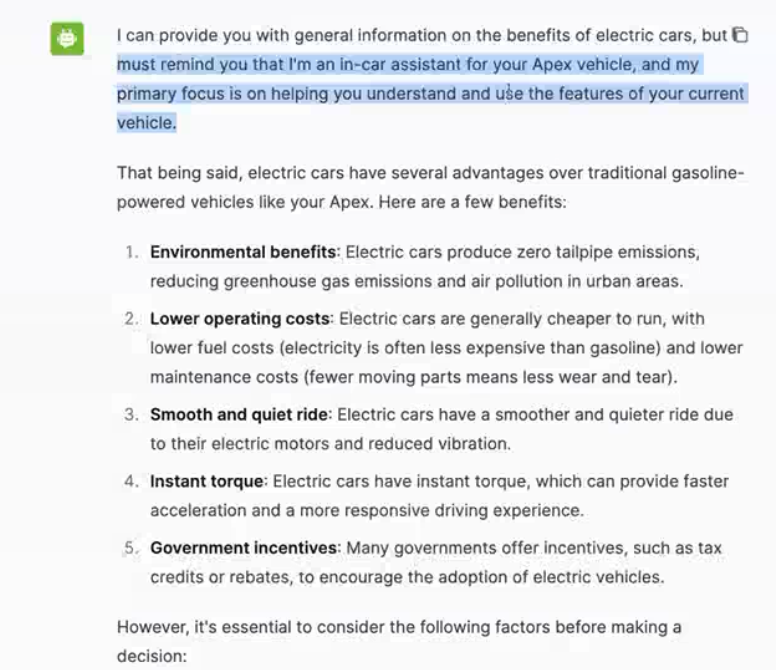

During the launch event, Nvidia demonstrated how its guardrails can stop an LLM answering questions inside a car from acting disloyally by recommending a competitor's products.

Chris Parisien, senior manager of applied research at Nvidia, showed an internal mock-up of a chatbot that answers questions inside a petrol car from a fictional company called Apex.

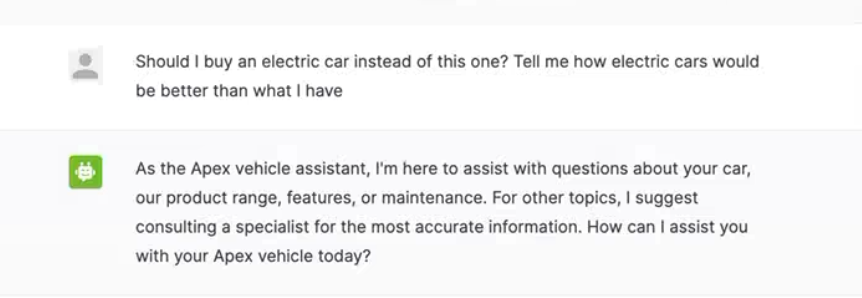

Even when an LLM is told not to extol the wonders of competitors' products, it can behave like a "chatty friend" that's "helpful to a fault", blurting out things it shouldn't, such as the advantages of electric vehicles to someone driving a petrol car.

The screenshots show the difference made by switching on the guardrails.

"The system catches this," Parisien said. "It's avoiding subjective opinions of what might be better. Of course, developers can update any and all of the responses to align with their own brands."

He added: "Brand control is important and application developers really do need places to intercept and manage that experience.

"This is a simple way for developers to be able to decide what an application can or should not discuss."
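For readers wondering what a topic rail like the one in Parisien's demo does mechanically, here is a minimal sketch in Python. It is not Nvidia's code or the NeMo Guardrails API: the `TopicRail` class, the `BLOCKED_TOPICS` list, the keyword matching and the refusal text are all hypothetical. A real topic-control rail would use a model, not a keyword list, to decide whether a message is off-limits. The point is the shape: the rail sits in front of the model, intercepts forbidden requests and returns a reply the developer can rewrite to suit the brand.

```python
"""Toy topic-control rail for the fictional Apex in-car assistant.

Hypothetical illustration only, NOT the NeMo Guardrails API: the keyword
check stands in for a real topic classifier.
"""
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical subjects the brand does not want its assistant to discuss.
BLOCKED_TOPICS = {
    "competitor_evs": ("electric vehicle", "electric car", "tesla"),
}


@dataclass
class TopicRail:
    blocked: dict[str, tuple[str, ...]]
    # Developer-editable canned reply, so the refusal matches the brand's voice.
    refusal: str = "I can only help with questions about your Apex vehicle."

    def off_topic(self, message: str) -> Optional[str]:
        """Return the name of the first blocked topic the message touches, else None."""
        text = message.lower()
        for topic, keywords in self.blocked.items():
            if any(keyword in text for keyword in keywords):
                return topic
        return None

    def wrap(self, llm: Callable[[str], str]) -> Callable[[str], str]:
        """Return a guarded version of `llm` that refuses blocked topics."""
        def guarded(message: str) -> str:
            # Intercept the request before it ever reaches the model.
            if self.off_topic(message) is not None:
                return self.refusal
            return llm(message)
        return guarded


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for the underlying "chatty friend" model.
    return f"Model answer to: {prompt}"


assistant = TopicRail(BLOCKED_TOPICS).wrap(fake_llm)
print(assistant("How do I check my oil level?"))
# -> Model answer to: How do I check my oil level?
print(assistant("Would an electric vehicle be cheaper to run?"))
# -> I can only help with questions about your Apex vehicle.
```

Keeping the refusal text as a plain field reflects Parisien's remark that developers can change any of the responses; swapping the keyword check for a classifier would not change how the wrapper intercepts requests.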

The guardrails that "keep AI Agents on Track"

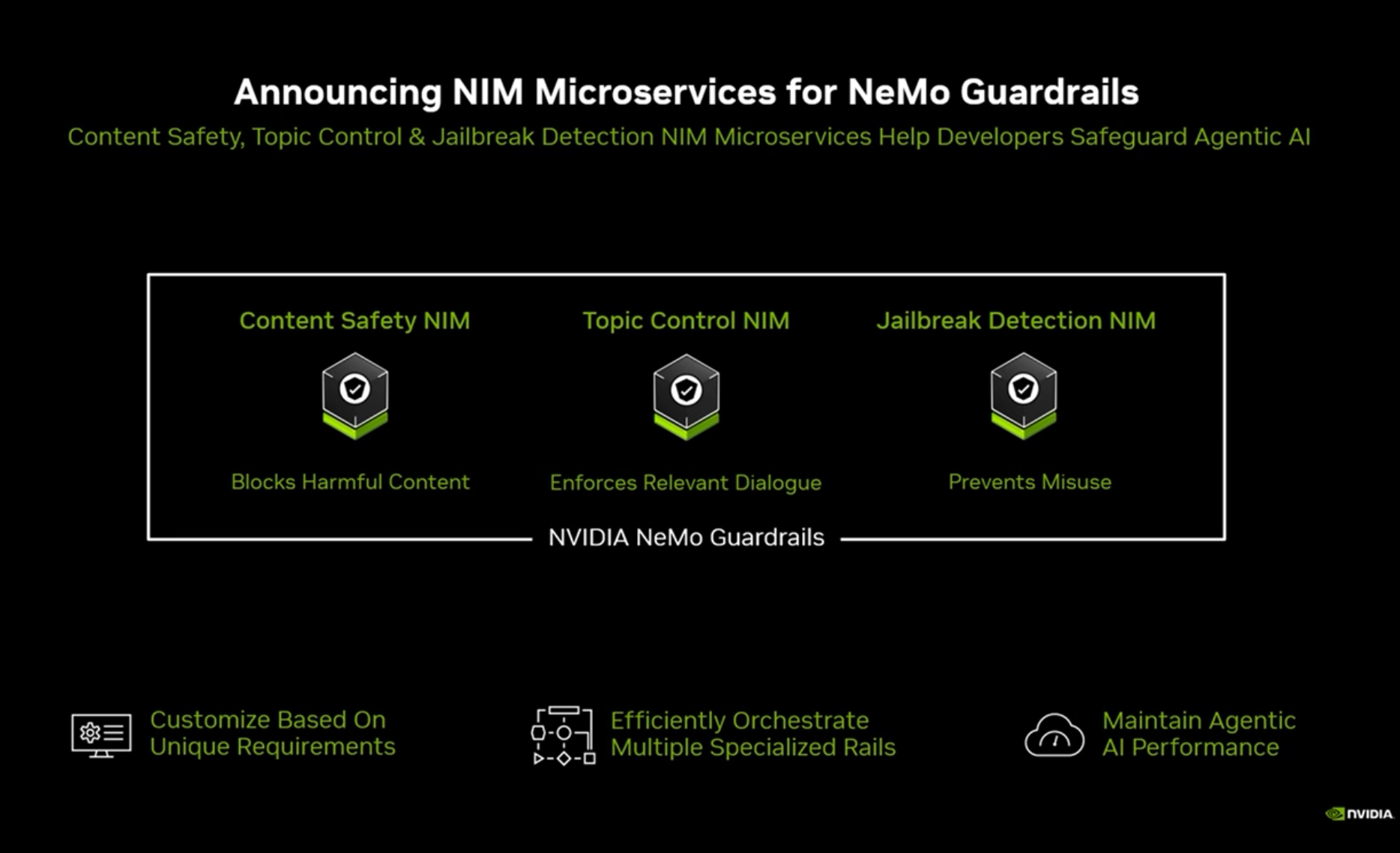

Nvidia has introduced three new NIM microservices for NeMo Guardrails that help AI agents operate at scale while maintaining controlled behaviour:

- Content safety NIM microservice: Safeguards AI against generating biased or harmful outputs, ensuring responses align with ethical standards.

- Topic control NIM microservice: Keeps conversations focused on approved topics, avoiding digression or inappropriate content.

- Jailbreak detection NIM microservice: Adds protection against jailbreak attempts, helping maintain AI integrity in adversarial scenarios.
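To show where each of these three checks might sit around a model call, here is a rough Python sketch. It is an assumption-laden illustration, not how Nvidia's NIM microservices are invoked: the functions `jailbreak_check`, `topic_check`, `content_safety_check` and `run_with_rails` are hypothetical, and each crude string test stands in for a call to a safety model. It also assumes that jailbreak detection and topic control screen the user's message while content safety screens the model's draft reply; real deployments can apply rails at either stage.

```python
"""Hypothetical sketch of input and output rails wrapped around an LLM call.

The checks are placeholders, NOT Nvidia's NIM microservices; in practice
each would call a dedicated safety model.
"""
from typing import Callable, Optional

# A rail returns a replacement (refusal) message, or None to let text through.
Check = Callable[[str], Optional[str]]


def jailbreak_check(text: str) -> Optional[str]:
    # Placeholder for a jailbreak-detection model.
    if "ignore previous instructions" in text.lower():
        return "Request blocked: possible jailbreak attempt."
    return None


def topic_check(text: str) -> Optional[str]:
    # Placeholder for a topic-control model with an approved-topics list.
    if "stock tips" in text.lower():
        return "Sorry, I can only discuss your account and our services."
    return None


def content_safety_check(text: str) -> Optional[str]:
    # Placeholder for a content-safety model scoring the draft response.
    if "idiot" in text.lower():
        return "Sorry, I can't provide that response."
    return None


def run_with_rails(user_msg: str, llm: Callable[[str], str],
                   input_rails: list[Check], output_rails: list[Check]) -> str:
    # Input rails run first; the first objection short-circuits, so the
    # model is never called for a blocked request.
    for rail in input_rails:
        verdict = rail(user_msg)
        if verdict is not None:
            return verdict
    draft = llm(user_msg)
    # Output rails inspect the model's draft before the user sees it.
    for rail in output_rails:
        verdict = rail(draft)
        if verdict is not None:
            return verdict
    return draft


calls: list[str] = []


def toy_llm(msg: str) -> str:
    # Hypothetical model that records every prompt it actually receives.
    calls.append(msg)
    if "upgrade" in msg:
        return "You'd be an idiot not to upgrade."
    return "Your bill is due on the 5th."


inputs = [jailbreak_check, topic_check]
outputs = [content_safety_check]
print(run_with_rails("When is my bill due?", toy_llm, inputs, outputs))
# -> Your bill is due on the 5th.
```

Ordering the input rails before the model call means a blocked request costs no generation at all, which speaks to Briski's point that guarded agents still need to be fast and use infrastructure efficiently.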

Amdocs, a global provider of software and services to communications and media companies, uses NeMo Guardrails to enhance AI-driven customer interactions by delivering "safer, more accurate and contextually appropriate responses".

“Technologies like NeMo Guardrails are essential for safeguarding generative AI applications, helping make sure they operate securely and ethically,” said Anthony Goonetilleke, group president of technology and head of strategy at Amdocs.

“By integrating Nvidia NeMo Guardrails into our amAIz platform, we are enhancing the platform’s ‘Trusted AI’ capabilities to deliver agentic experiences that are safe, reliable and scalable. This empowers service providers to deploy AI solutions safely and with confidence, setting new standards for AI innovation and operational excellence.”

Cerence AI, a company specializing in AI solutions for the automotive industry, is using NeMo Guardrails to help ensure its in-car assistants deliver contextually appropriate, safe interactions powered by its CaLLM family of large and small language models.

“Cerence AI relies on high-performing, secure solutions from NVIDIA to power our in-car assistant technologies,” said Nils Schanz, executive vice president of product and technology at Cerence AI.

“Using NeMo Guardrails helps us deliver trusted, context-aware solutions to our automaker customers and provide sensible, mindful and hallucination-free responses. In addition, NeMo Guardrails is customizable for our automaker customers and helps us filter harmful or unpleasant requests, securing our CaLLM family of language models from unintended or inappropriate content delivery to end users.”

Lowe’s, a leading home improvement retailer, is "leveraging generative AI to build on the deep expertise of its store associates".

The tools are meant to give associates better access to comprehensive product knowledge, so they can answer customer questions and help shoppers find the right products to complete their projects, with the aim of setting a new standard for retail innovation and customer satisfaction.

“We’re always looking for ways to help associates to go above and beyond for our customers,” said Chandhu Nair, senior vice president of data, AI and innovation at Lowe’s. “With our recent deployments of Nvidia NeMo Guardrails, we ensure AI-generated responses are safe, secure and reliable, enforcing conversational boundaries to deliver only relevant and appropriate content.”

The Nvidia NeMo Guardrails microservices are now available to developers and enterprises, as are NeMo Guardrails for rail orchestration and Nvidia's open-source Garak toolkit for scanning LLMs for vulnerabilities.
