Meta is using AI to make NPCs more human, less robotic and hopefully not quite as ridiculous

Patent application reveals plans to give non-player characters the ability to speak and behave realistically using LLMs and GenAI.

In video games, non-player characters (NPCs) are known for engaging in stilted conversations, glitchily walking into walls and possessing a level of intelligence closer to that of a housefly than a human.

Now the company formerly known as Facebook has revealed plans to use AI to make NPCs behave more like real people, rather than half-witted automatons.

Before we start, it's worth mentioning that the exact meaning of NPC has changed over the years.

Today, the term NPC refers to a real person who mindlessly follows trends, repeats mainstream opinions and totally lacks independent thought, with their speech and arguments resembling the pre-programmed responses of auxiliary characters in games. The image at the top of this article is associated with real-life NPCs.

Yet to gamers, the acronym refers to any character controlled by the game rather than the player.

Although NPCs typically follow scripted behaviours and dialogue, most are now blessed with some form of rudimentary AI to allow a degree of spontaneous, human-like action.

But they're not always particularly convincing...

Why is Meta reinventing the NPC?

Machine has uncovered a new patent application from Meta which sets out a system designed to make NPCs in VR and the Metaverse act more realistically by using large language models (LLMs) to power convincing conversations and behaviour.

The application seeks to protect Meta’s method of training and deploying adaptive NPCs in virtual environments. These characters would learn and adapt from user behaviour, recall past interactions, build relationships and tailor their tone or response style to how the player is currently engaging with them.
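To give a rough idea of what "recall interactions, build relationships and tailor tone" could mean in practice, here is a minimal Python sketch. It assumes a simple per-player affinity score that nudges the NPC's speaking style. The `Relationship` class, the score range and the tone labels are all hypothetical illustrations, not anything taken from Meta's filing.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Relationship:
    """Hypothetical per-player memory an NPC might keep (not Meta's design)."""
    affinity: int = 0                              # clamped to the range -10..10
    history: List[str] = field(default_factory=list)

    def record(self, event: str, delta: int) -> None:
        # Remember what happened and adjust how the NPC feels about the player.
        self.history.append(event)
        self.affinity = max(-10, min(10, self.affinity + delta))

    def tone(self) -> str:
        # Map the running score onto a speaking style that could go into a prompt.
        if self.affinity >= 5:
            return "warm and familiar"
        if self.affinity <= -5:
            return "curt and suspicious"
        return "polite but reserved"


barkeep = Relationship()
barkeep.record("paid for a round of drinks", 3)
barkeep.record("helped chase off the goblins", 4)
print(barkeep.tone())  # warm and familiar
```

A single number is obviously crude; the point is only that remembered events can feed back into how a character speaks next time.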

The problem with NPCs, Meta states, is that they are not able to handle "out of domain" (OOD) requests, which are beyond the bounds of their rather limited programming. When confronted with an unfamiliar situation, the characters simply break down and say things like: "Sorry, I can't do that."

This can "break immersion" and remind players of the simulated nature of the virtual reality they are exploring, Meta warned.

"When fully immersed into a VR environment, users may want to interact with characters like they would with another human being in a real transactional environment," Meta wrote.

"By letting an LLM handle generating the responses, this approach saves resources spent needing to develop a complex dialogue tree. This approach also may mitigate key pain points in VR interactions and may improve the developer's ability to create authentic, immersive voice experiences centred around the ubiquitous NPC, which are staples of games and app experiences."

Moving beyond scripted dialogue

In the past, entire "dialogue trees" had to be written to give NPCs something to talk about, placing obvious limits on the topics they could discuss.

GenAI, LLMs and techniques such as natural language processing (NLP) can overcome this obstacle by generating replies in real time that reflect what is actually happening around the player. This should put an end to the ridiculous scenarios in which NPCs happily chat about the wares they have for sale whilst, behind them, a huge dragon burns the city to the ground and kills everyone they've ever known.

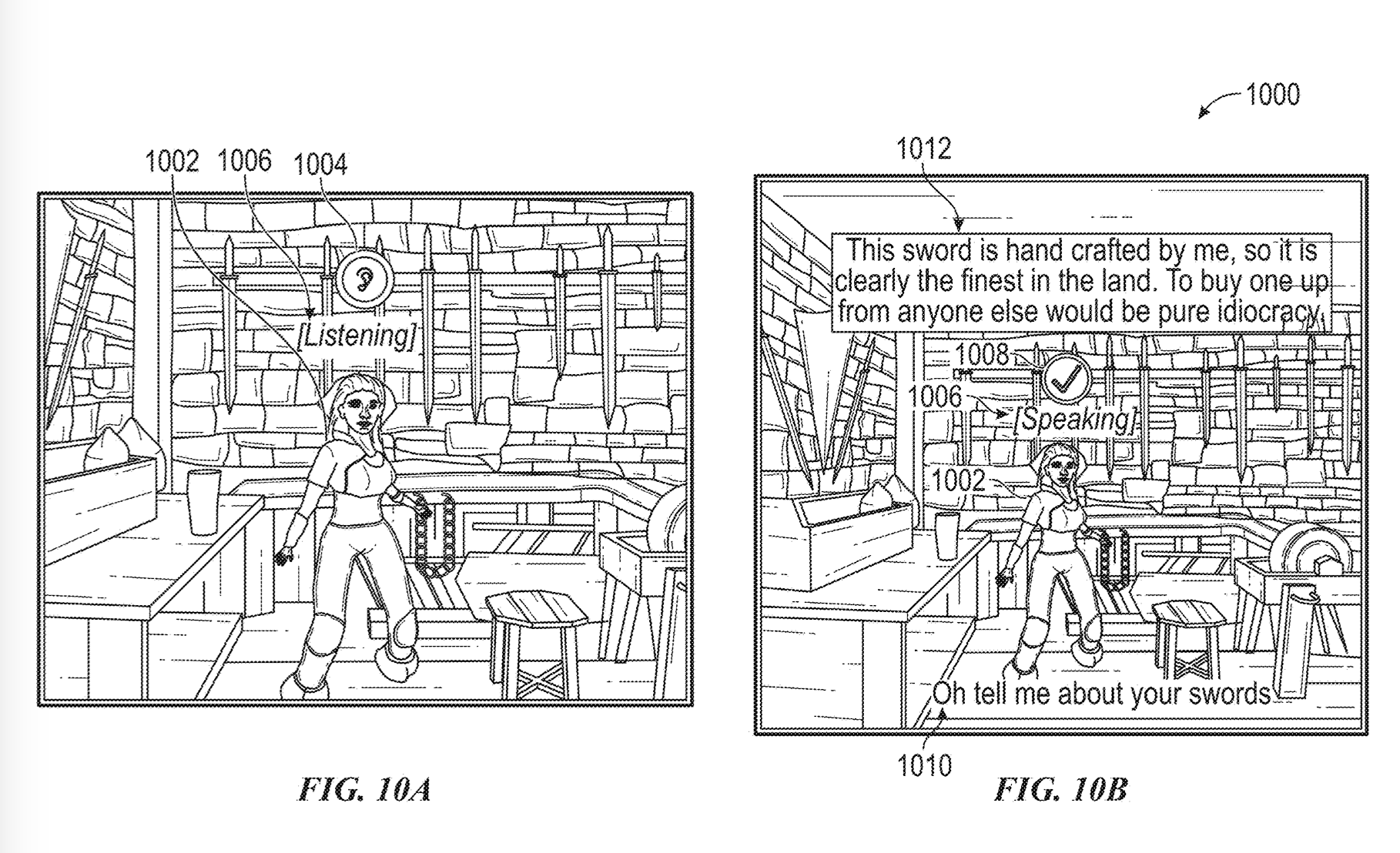

"A shopkeeper NPC may help facilitate in-app transactions and purchases," Meta added. "Through enabling generative responses, the interaction with the shopkeeper NPC would be given more depth and personality to the NPC while still allowing for pre-coded responses to purchasing requests. By doing so, this interaction and many others like it may mirror real-world situations, such as a shopkeeper taking orders and making chitchat with a customer while fielding questions they may not have context to answer."


An example of rudimentary NPC design

The application describes a toolkit that lets software developers add NPC agents to their experiences. These agents can converse with users in dynamically generated natural language whilst still responding predictably to predetermined verbal cues.
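The split between predictable, pre-coded responses and free-form generated chat can be sketched in a few lines of Python. In this minimal example, assumed for illustration, keyword cues (such as a purchase request) are routed to scripted handlers and everything else falls through to a language model. The `NPC` class, the cue table and the `fake_llm` stub are hypothetical; the filing does not publish an API, and a real system would call an actual LLM rather than the stub used here.

```python
from typing import Callable, Dict

# A pre-coded handler returns a fixed, predictable reply (hypothetical).
Handler = Callable[[str], str]


class NPC:
    """Illustrative NPC mixing scripted cues with generated dialogue."""

    def __init__(self, cues: Dict[str, Handler], generate: Callable[[str], str]) -> None:
        self.cues = cues          # keyword -> pre-coded handler
        self.generate = generate  # stand-in for an LLM call (assumption)

    def respond(self, utterance: str) -> str:
        text = utterance.lower()
        # Predetermined verbal cues win, so transactions stay predictable.
        for keyword, handler in self.cues.items():
            if keyword in text:
                return handler(utterance)
        # "Out of domain" requests go to the generative model
        # instead of a canned "Sorry, I can't do that."
        return self.generate(utterance)


def sell_potion(_: str) -> str:
    return "That'll be 10 gold for the healing potion."


def fake_llm(prompt: str) -> str:
    # Offline stub so the sketch runs; swap in a real model client here.
    return f"(generated reply to: {prompt})"


shopkeeper = NPC({"buy": sell_potion}, fake_llm)
print(shopkeeper.respond("I'd like to buy a potion"))
# That'll be 10 gold for the healing potion.
print(shopkeeper.respond("Is that a dragon behind you?"))
# (generated reply to: Is that a dragon behind you?)
```

Checking the scripted cues first is one plausible way to keep purchases deterministic while leaving small talk to the model.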

Using GenAI, NPCs can be given emotions and change their behaviour based on their mood.

Meta continued: "This allows for real conversations with the NPC that go beyond pre-programmed dialogue trees. The LLM may generate in-game actions for NPCs. The responses from the LLM can be fed back into the natural language interface to cause the in-game action to happen."
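One way to feed an LLM's output back into the game as an action is to ask the model for structured output and only act on commands the engine recognises. The Python sketch below assumes a JSON reply with `say` and `action` fields and a hypothetical whitelist of actions; neither the format nor the action names come from Meta's application.

```python
import json
from typing import Callable, Dict, Optional, Tuple

# Hypothetical whitelist of actions the game engine knows how to perform.
ACTIONS: Dict[str, Callable[[], str]] = {
    "wave": lambda: "NPC waves at the player",
    "hand_over_map": lambda: "NPC hands the player a map",
}


def apply_llm_output(raw: str) -> Tuple[str, Optional[str]]:
    """Parse an assumed JSON reply and trigger any whitelisted action."""
    try:
        reply = json.loads(raw)
    except json.JSONDecodeError:
        # Malformed output: treat the whole text as speech and do nothing.
        return raw, None
    if not isinstance(reply, dict):
        return raw, None
    line = str(reply.get("say", ""))
    action = reply.get("action")
    # Unknown or malformed actions are ignored rather than executed.
    if isinstance(action, str) and action in ACTIONS:
        return line, ACTIONS[action]()
    return line, None


print(apply_llm_output('{"say": "Here, take this.", "action": "hand_over_map"}'))
# ('Here, take this.', 'NPC hands the player a map')
```

The whitelist matters: a generative model can propose anything, so the game, not the model, decides what actually happens.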

This toolkit contains a "perception system" and even allows NPCs to show the player whether they are listening and paying attention or totally ignoring them.

"Since these characters exist within worlds which contain more than a simple conversation, knowledge of those goings-on is provided to the LLM," Meta wrote. "It would easily break immersion for a character to respond identically to a player whether the player simply approached them calmly or approached them whilst discharging a series of firearms or swinging about a sword."
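Passing "knowledge of those goings-on" to the model might look something like the Python sketch below, which turns a snapshot of what the NPC can perceive into plain-language context at the top of the prompt. The `Perception` fields, the two-metre threshold and the prompt wording are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Perception:
    """Hypothetical snapshot of what the NPC can currently observe."""
    player_distance_m: float
    weapon_drawn: bool
    recent_events: List[str] = field(default_factory=list)


def build_prompt(persona: str, seen: Perception, player_line: str) -> str:
    # Translate game state into sentences the language model can use.
    observations: List[str] = []
    if seen.player_distance_m < 2.0:  # arbitrary "close by" threshold
        observations.append("The player is standing right next to you.")
    if seen.weapon_drawn:
        observations.append("The player has a weapon drawn.")
    observations.extend(seen.recent_events)
    context = " ".join(observations) or "Nothing unusual is happening."
    return (
        f"{persona}\n"
        f"Current situation: {context}\n"
        f'The player says: "{player_line}"\n'
        "Reply in character."
    )


print(build_prompt("You are a barkeep in a busy tavern.",
                   Perception(player_distance_m=1.0, weapon_drawn=True),
                   "An ale, please."))
```

With the weapon flag set, the same request reaches the model with very different context, which is what lets a character react to a sword-swinging customer differently from a calm one.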

NPCs can be given "stage direction" to offer responses which move the game's story forward. They can be programmed to be seductive or flirtatious, as well as viciously aggressive, or be prompted to ask questions which drive forward "intent" and push gamers to take action, such as: “Where do I find the secret lair of the lich king?”

World knowledge and learning from experience

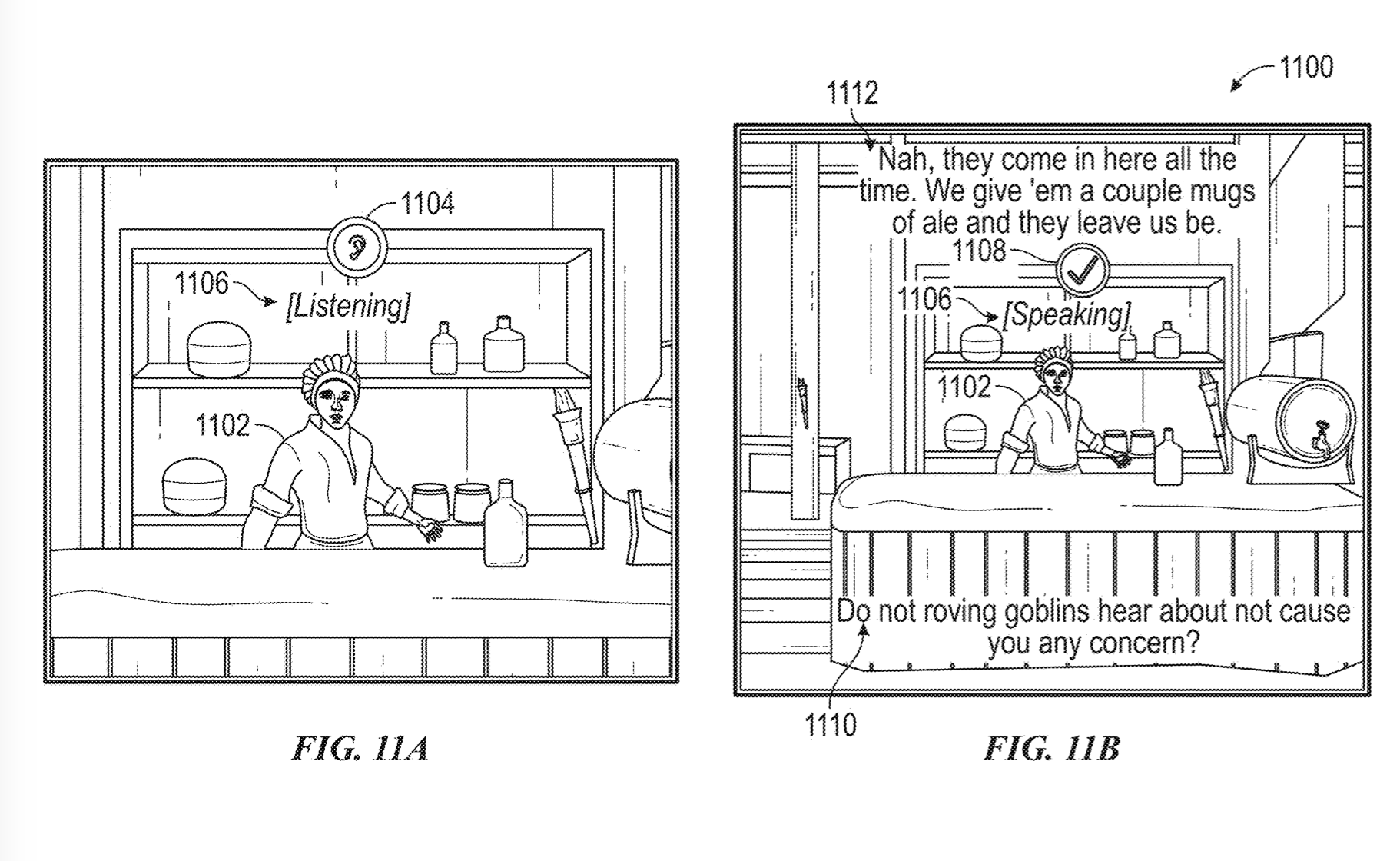

Meta gave lots of cool examples of how its NPCs might respond in future.

One is a "barkeep" who can respond dynamically to questions such as: "Do the roving goblins here about not cause you any concern?".

The same barkeep can also notice that the player is wielding a weapon whilst enjoying an ale and respond: "Be careful with your sword!"

The LLMs that power these conversations can be trained on material such as screenplays to make their dialogue more convincing, and can be given "world-level" knowledge of their environment, as well as the flexibility to respond to events as they happen.

Examples of this knowledge could include factoids such as:

- "There exist dragons of multiple colours in the world, and they are generally not friendly."

- "The bakery named Susan's Sweetmeats is in the city of Mubarrak."

- "Susan has no children and is very happy about it."
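A world will hold far more factoids than fit in a single prompt, so a developer would need some way to pick the relevant ones. The Python sketch below uses naive keyword overlap, a deliberately simple stand-in technique (a production system might use embedding search instead); the article does not say how Meta selects world knowledge, and the `keywords` and `relevant_facts` helpers are hypothetical.

```python
import re
from typing import List, Set

WORLD_FACTS = [
    "There exist dragons of multiple colours in the world, and they are generally not friendly.",
    "The bakery named Susan's Sweetmeats is in the city of Mubarrak.",
    "Susan has no children and is very happy about it.",
]


def keywords(text: str) -> Set[str]:
    # Lower-case words longer than three letters, to skip "the", "is", "in"...
    return {w for w in re.findall(r"[a-z']+", text.lower()) if len(w) > 3}


def relevant_facts(query: str, facts: List[str], limit: int = 2) -> List[str]:
    """Return up to `limit` facts sharing the most keywords with the query."""
    q = keywords(query)
    scored = [(len(q & keywords(fact)), fact) for fact in facts]
    ranked = sorted(scored, key=lambda pair: -pair[0])
    return [fact for score, fact in ranked if score > 0][:limit]


print(relevant_facts("Are the dragons around here friendly?", WORLD_FACTS))
```

The selected facts would then be prepended to the NPC's prompt, so a barkeep asked about dragons "knows" they are unfriendly without being told everything else about the world.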

This all sounds very impressive, and probably very funny, as the new characters are likely to have an even wider range of quirks than the old ones. We look forward to seeing the next generation of AI-powered NPCs and hope they're as loveable as their predecessors.

For some reason, direct links to the US Patent and Trademark Office don't work. To find this application, visit the USPTO website and search for publication number 20250037391 (the full reference is US-20250037391-A1).
