Is the UK finally going to ban under-16s from social media?

Parliament prepares to debate petition to increase the age limit for joining X, Meta, TikTok and other platforms.

MPs are preparing to debate a petition calling for children under 16 to be banned from using social media.

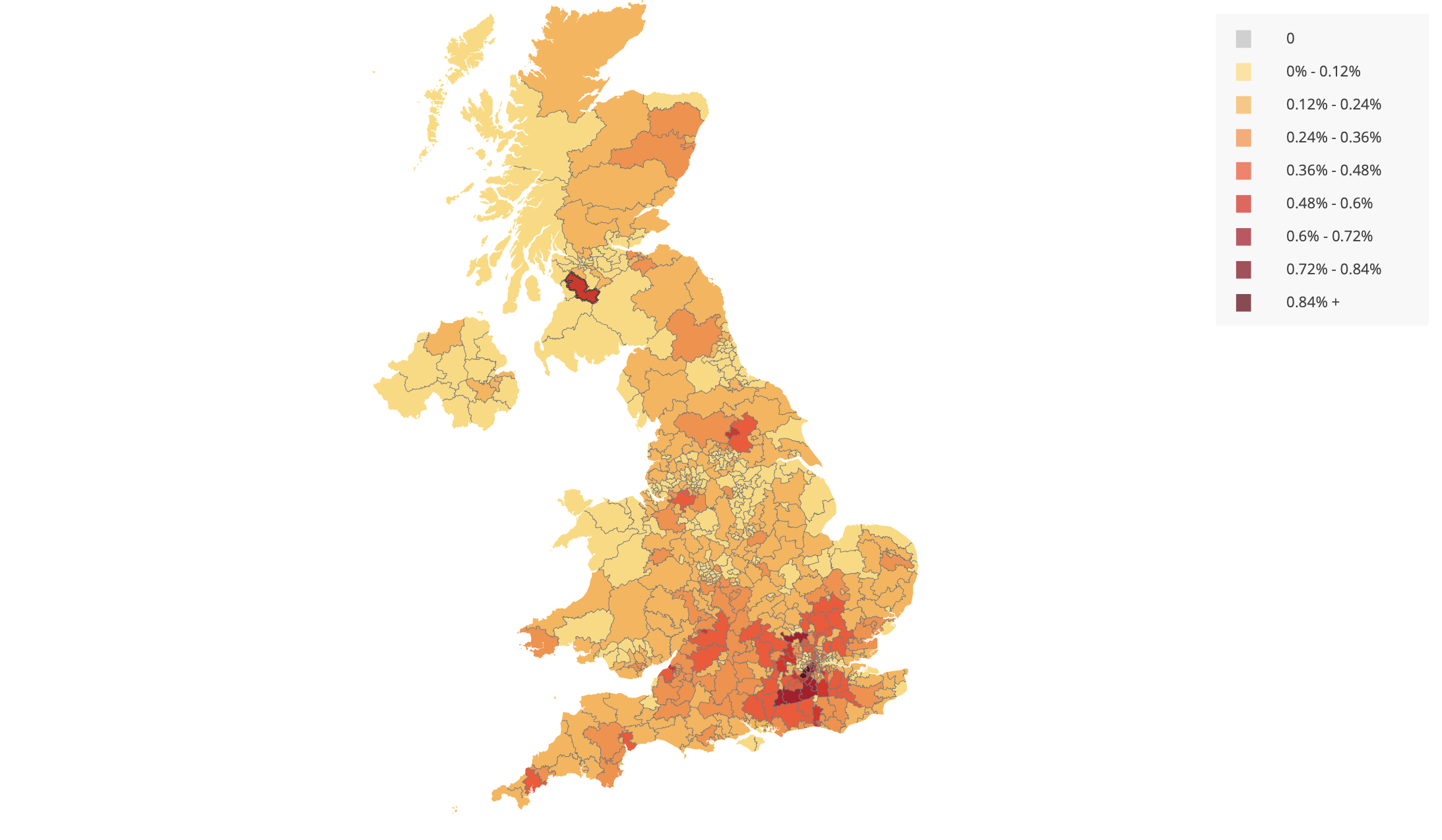

More than 127,000 people have signed a campaign calling for an urgent increase to the minimum age at which children can open profiles on platforms like X or TikTok.

"We believe social media is having more of a negative impact to children than a positive one," the petitioner wrote. "We think people should be of an age where they can make decisions about their life before accessing social media applications.

"We believe that we really need to introduce a minimum age of 16 to access social media for the sake of our children’s future along with their mental and physical health."

The petition states that keeping kids off socials would help to stop online bullying, prevent children from "being influenced by false posts," and make sure they don't see content that encourages violence or "could be harmful for their future".

So far, the Labour government has refused to heed the calls.

On 20 November 2024, technology secretary Peter Kyle said a possible ban on social media for under-16s was "on the table". Two days later, Kyle changed his mind and stated that a change was "not on the cards".

When will MPs debate the social media age limit petition?

In December 2024, the government responded to the petition and wrote: "The government is aware of the ongoing debate as to what age children should have smartphones and access to social media. The government is not currently minded to support a ban for children under 16."

The Petitions Committee then stepped in to force a better response after finding the government "did not respond directly to the request".

In its revised response, the government wrote: "Children face a significant risk of harm online and we understand that families are concerned about their children experiencing online bullying, encountering content that encourages violence, or other content which may be harmful.

"We will continue to do what is needed to keep children safe online. However, this is a complicated issue. We live in a digital age and must strike the right balance so that children can access the benefits of being online and using smartphones while we continue to put their safety first. Furthermore, we must also protect the right of parents to make decisions about their child’s upbringing."

It said the current evidence on screen time was "mixed" and that a 2019 review by the UK Chief Medical Officers did not, for some inexplicable reason, find a causal link between "screen-based activities and mental health problems". However, it acknowledged that other studies had found a link with anxiety or depression.

Because it has reached 100,000 signatures, the petition will be debated on 24 February 2025 in Westminster Hall.

Labour, censorship and the joys of regulation

Last month, the government commissioned a feasibility study into future research to understand the ongoing impact of smartphones and social media on children. In other words, it launched an inquiry about whether to launch an inquiry.

In the meantime, Labour is committed to implementing the controversial Online Safety Act 2023.

"The Act puts a range of new duties on social media companies and search services, making them responsible for their users’ safety, with the strongest provisions in the Act for children," the government said.

"Social media platforms, other user-to-user services and search services that are likely to be accessed by children will have a duty to take steps to prevent children from encountering the most harmful content that has been designated as ‘primary priority’ content.

"This includes pornography and content that encourages, promotes, or provides instructions for self-harm, eating disorders, or suicide. Online services must also put in place age-appropriate measures to protect children from ‘priority’ content that is harmful to children, including bullying, abusive or hateful content and content that encourages or depicts serious violence."

Under the Act, services that set minimum age limits must specify how these are enforced and ensure they do so consistently.

Social media companies regulated by the Act must "take steps to protect all users from illegal content and criminal activity on their services".

"We will continue to work with stakeholders to balance important considerations regarding the safety and privacy of children," the government promised.

The Online Safety Act 2023 is controversial due to concerns about censorship, potential free speech restrictions and privacy risks. It grants broad powers to Ofcom, which could lead to overzealous removal of lawful content, and contains a "spy clause" that could mandate message scanning, threatening encryption. The threat of steep fines could also push platforms towards excessive moderation, which could harm journalism and political discourse.

Critics argue it gives the government too much control over online speech, raising concerns about bias and overreach as well as fears that it could stifle public debate.

This story is being updated. Please check back later for further comment and insights or get in touch to share your thoughts at the address below.

Have you got a story or insights to share? Get in touch and let us know.