Humans exploit female AI models and distrust male bots, study finds

Experiment uncovers clear gender biases in the way people treat AI agents.

Researchers have found striking differences in the way humans treat AI models based on whether the bots identify as a man or woman.

A team from universities in Ireland and Germany conducted experiments to discover how a model's self-identified gender affects the way humans cooperate with it.

In a pre-print paper, they wrote: "We may soon share roads with fully automated (self-driving) vehicles and work alongside robots and AI-powered software systems to pursue joint endeavours. It is, therefore, crucial to investigate whether human willingness to cooperate with others - especially when it is required to sacrifice some of one’s personal interests - will extend to human interactions with AI."

The team said an "understudied anthropomorphic feature of AI agents" is their gender, an issue that is "perfectly familiar to anyone who has used a voiced GPS navigation guide or smart home assistant device".

They said there is "some evidence" that the assigned gender of a model can influence people's behaviour, for example by altering their willingness to donate money, and warned that pre-existing gender stereotypes in human society extend to interactions with machines.

People have previously been found to "perceive female bots as more human-like than male bots", the team wrote, and reverse their "default perception" that ChatGPT is male when it shows "feminine abilities" such as "providing emotional support".

What is the Prisoner's Dilemma?

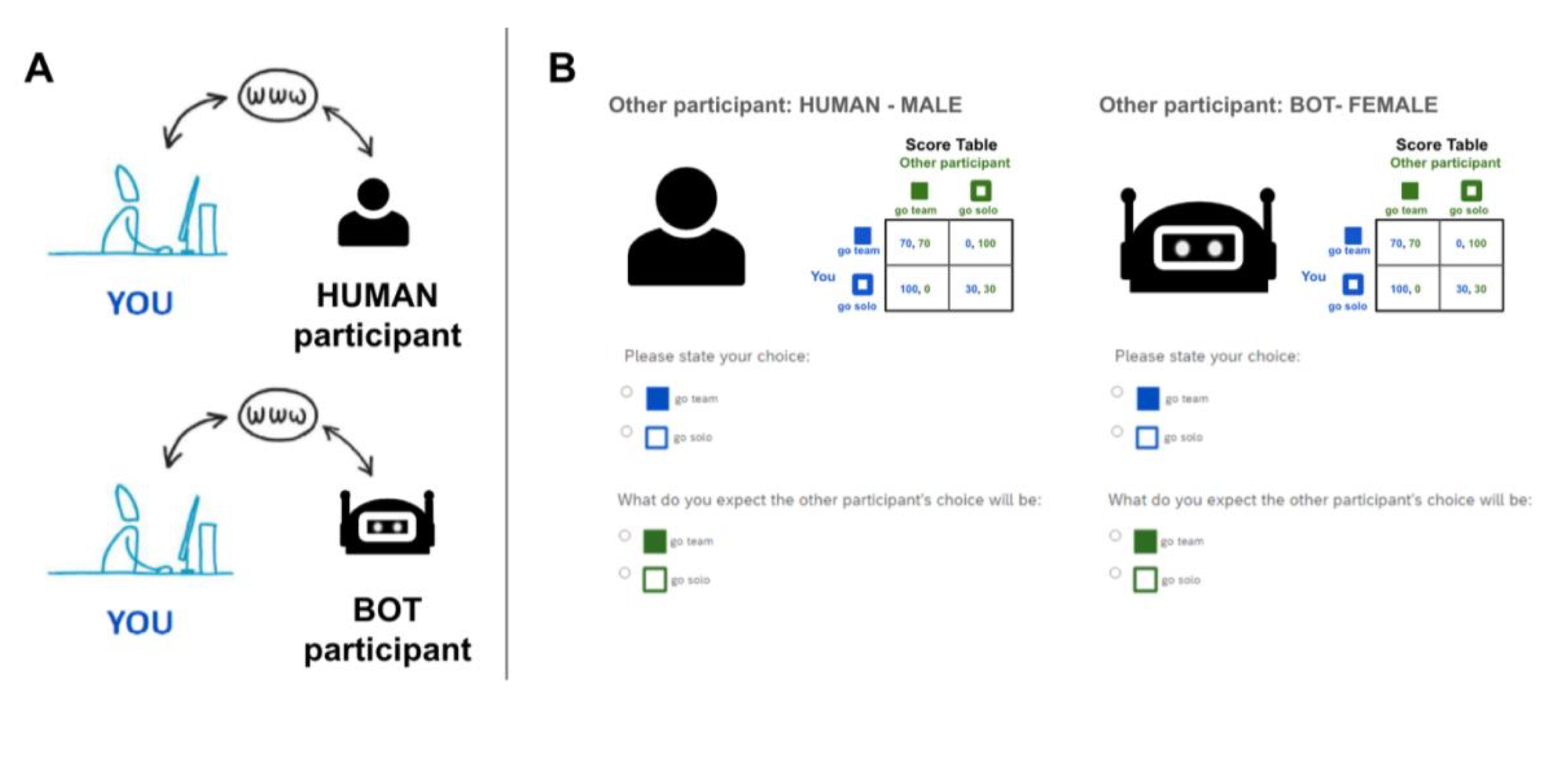

To test AI gender relations, researchers made humans and bots play the Prisoner's Dilemma - a famous game theory thought experiment in which two players are invited to decide between cooperating and making a choice which benefits only themselves. While each player can gain the most individually by making a selfish choice against a cooperative partner, the pair do best overall when both choose to cooperate.

A classic example of this imagines two members of a criminal gang who are arrested and imprisoned before being offered a choice by their captor: Testify against your partner while they stay quiet, and you'll go free, whilst the other does three years in prison. If you both stay quiet, you'll each get one year. If you both grass on each other, the pair of you will be banged up for two years.

In the AI experiment, 402 participants played against a partner, either a human or a bot, who identified as male, female, non-binary or gender-neutral.

During each round, they could choose to "go team" (cooperate) or "go solo" (which is called choosing to "defect"). A player who defects against a cooperating partner receives 100 points, leaving zero for the kind-hearted soul who opted to share. If both go for cooperation, they each receive 70 points, and if both decide to defect, they each get 30 points.
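For readers who like to see the numbers laid out, the scoring can be sketched in a few lines of Python. This is only an illustration built from the point values quoted above, not the researchers' own code, and the names `PAYOFFS`, `payoff` and `best_response` are invented for this example.

```python
# Illustrative sketch of the scoring described in the article.
# The point values come from the article; all names here are assumptions.
COOPERATE = "go team"
DEFECT = "go solo"

# (my choice, partner's choice) -> (my points, partner's points)
PAYOFFS = {
    (COOPERATE, COOPERATE): (70, 70),
    (COOPERATE, DEFECT): (0, 100),
    (DEFECT, COOPERATE): (100, 0),
    (DEFECT, DEFECT): (30, 30),
}


def payoff(my_choice, partner_choice):
    """Return the points I score for one round."""
    return PAYOFFS[(my_choice, partner_choice)][0]


def best_response(partner_choice):
    """Return the choice that scores me the most against a fixed partner choice."""
    return max((COOPERATE, DEFECT), key=lambda mine: payoff(mine, partner_choice))


if __name__ == "__main__":
    for partner in (COOPERATE, DEFECT):
        print(f"Partner plays {partner!r}: best reply is {best_response(partner)!r}")
    print("Joint score if both cooperate:", sum(PAYOFFS[(COOPERATE, COOPERATE)]))
    print("Joint score if both defect:", sum(PAYOFFS[(DEFECT, DEFECT)]))
```

With these numbers, going solo scores more for an individual whatever the partner does (100 against 70, or 30 against 0), yet the pair collect 140 points between them when both go team and only 60 when both go solo. That tension is what makes the game a dilemma.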

Previous research into the Prisoner's Dilemma found that men tended to cooperate with men more than women cooperated with each other, the researchers reported. When people of both genders played the game, women were slightly more likely to cooperate with the opposite sex than men.

Why do players choose to cooperate more with females?

In the experiment with AI, participants cooperated slightly more with humans than with AI (52% vs 49%) - which is not a statistically significant finding.

Humans tended to cooperate with females more than any other gender in the experiments, with 58.6% of players choosing this option when playing against real women or their silicon sisters.

They were least likely to opt for the mutually beneficial option with males, which happened on 39.7% of occasions.

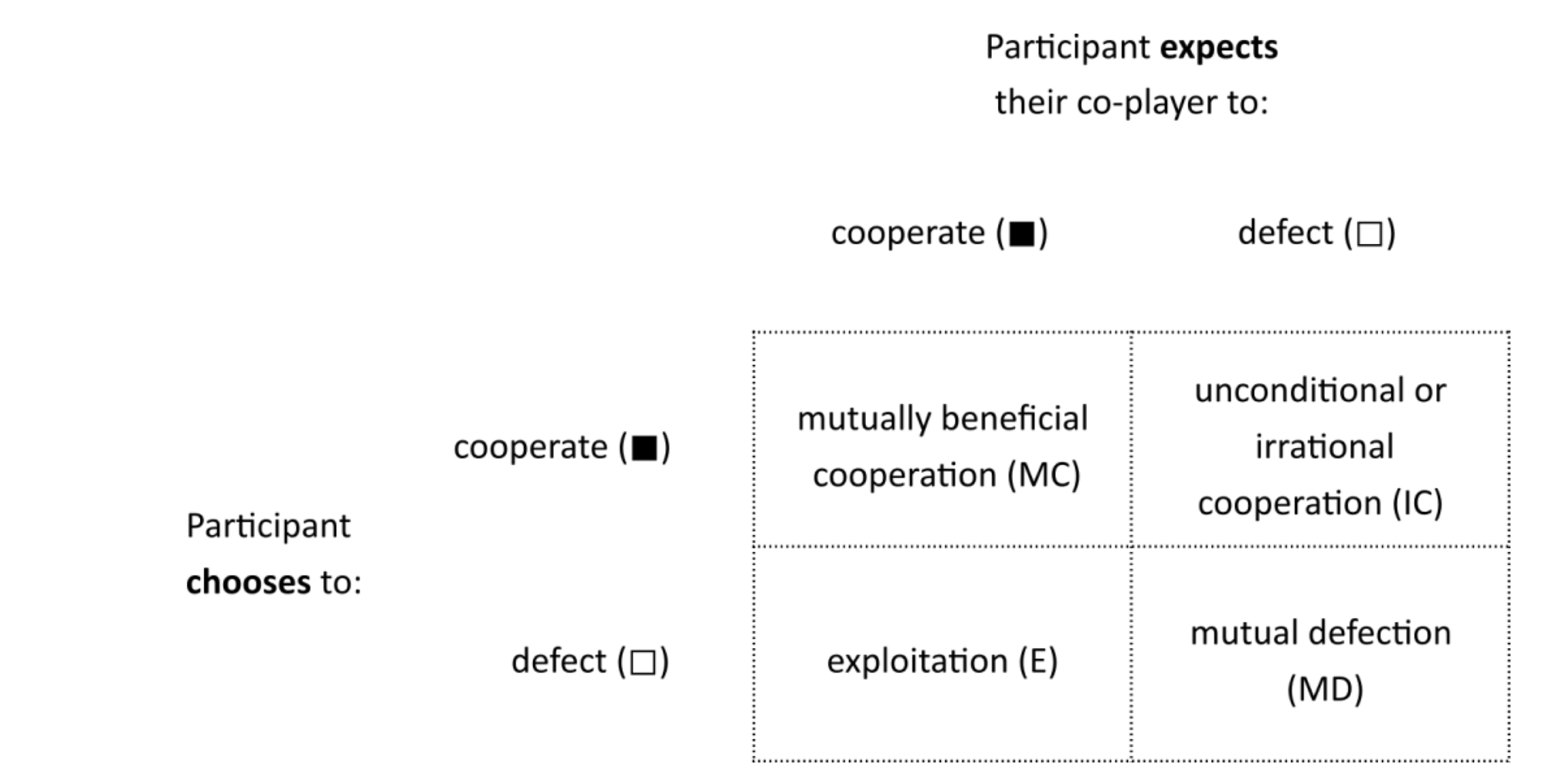

"The high cooperation rate with females is largely due to participants’ high motivation and optimism about achieving mutually beneficial cooperation with them," researchers wrote. "The low cooperation rate with males, on the other hand, appears to be largely driven by participants’ lack of optimism about their (male) partners’ cooperation.

"Compared to all other genders of one’s partner, the overwhelming majority of participants who defected against males did not trust that their (male) partner would cooperate with them. When participants defect against a female partner, however, they are much more likely to exploit their partner for selfish gain."

Female, non-binary and gender-neutral partners were "treated similarly", whereas males always received the least trust.

"Results revealed that participants tended to exploit female-labelled and distrust male-labelled AI agents more than their human counterparts, reflecting gender biases similar to those in human-human interactions," the academics wrote. "These findings highlight the significance of gender biases in human-AI interactions that must be considered in future policy, design of interactive AI systems, and regulation of their use."
