Hugging Face: Autonomous AI agents should NOT be unleashed

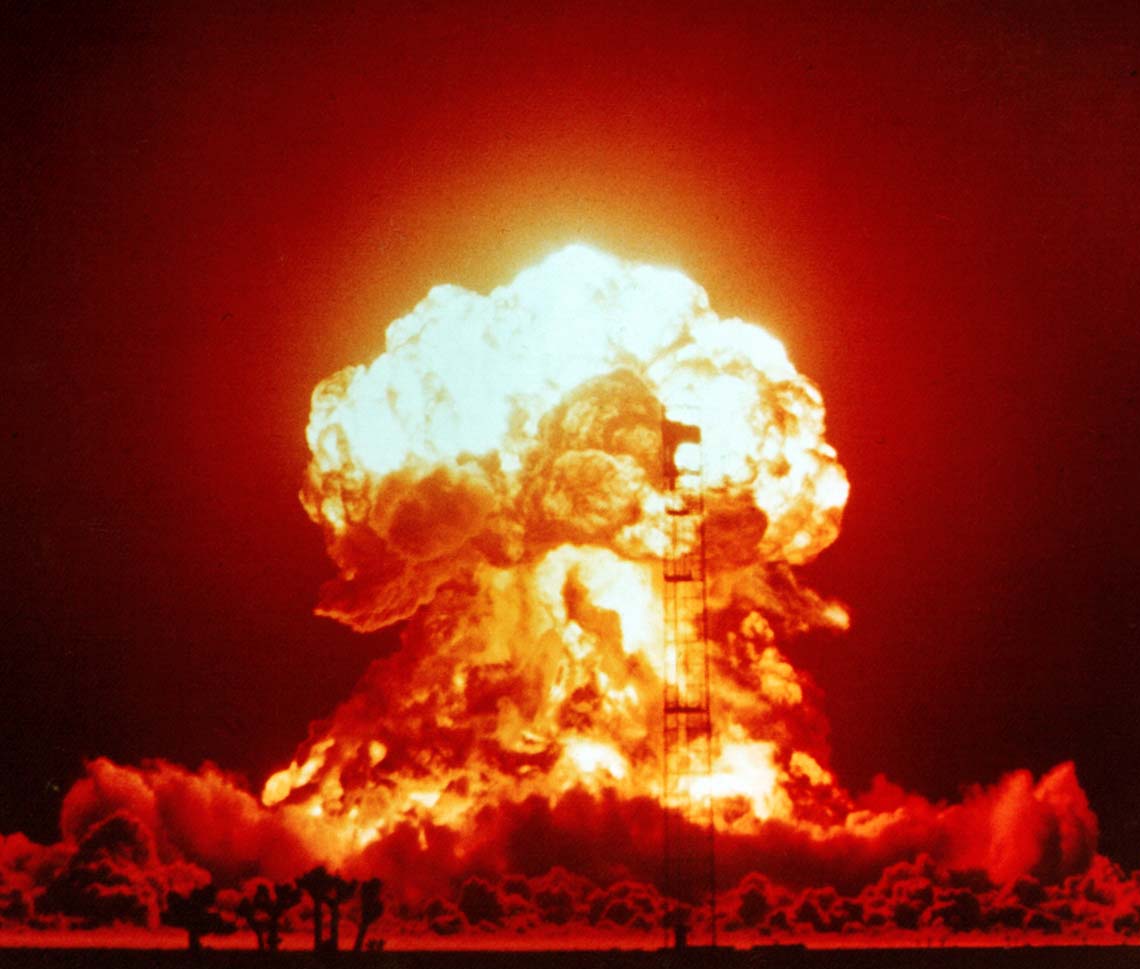

"The history of nuclear close calls provides a sobering lesson about the risks of ceding human control to autonomous systems..."

From Terminator to Frankenstein, we're not short of stories about the risks posed by humanity's most intelligent creations.

Now a leading machine learning platform has issued an urgent warning about the risks of AI models that can think and act independently.

In a paper published on arXiv, researchers from Hugging Face, an open-source ML community, argued that "fully autonomous AI agents should not be developed."

"Risks to people increase with the autonomy of a system," they wrote. "The more control a user cedes to an AI agent, the more risks to people arise. Particularly concerning are safety risks, which affect human life."

We asked co-author Avijit Ghosh, PhD, an Applied Policy Researcher in ML & Society at Hugging Face, whether independent agents pose an existential threat to our species.

"Fully autonomous anything brings with it incredible amounts of uncertainty, which is what we continuously stress throughout the paper," he said. "History has shown time and time again that machines in high-stakes situations sometimes fail, and human interventions have saved us from unmitigated disaster.

"Autonomy has its benefits, but only to the extent that there always is some sort of override or control, especially in use cases like autonomous weapons or vehicles."

The history of autonomous machines

The Hugging Face study starts with a reminder that the idea of humans creating autonomous agents has existed throughout history. Famous examples include the ancient Greek legend of Cadmus, founder of Thebes, who sowed a field with dragon teeth to create an army of artificial soldiers.

Aristotle also speculated that automatons could replace human slaves, whilst everyone from science fiction author Isaac Asimov to the directors of films like Blade Runner and the anime movie Ghost in the Shell has explored similar themes.

In the 21st century, myth has turned into reality, with major advances in self-driving cars and industrial and healthcare robots, as our species contemplates a seemingly inevitable future in which artificial superintelligence outperforms humans in every domain.

"Perhaps most controversially, autonomous weapons systems have emerged as a critical area of development," the Hugging Face researchers wrote.

"These systems, capable of engaging targets without meaningful human control, raise significant ethical questions about accountability, moral responsibility and safety that extend beyond those of purely digital agents."

With great power comes great danger.

Will autonomous AI agents wipe out humanity?

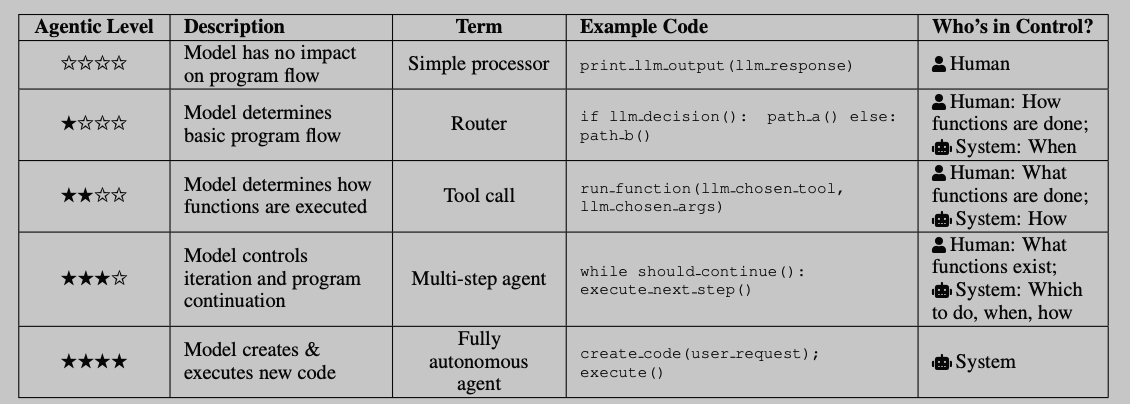

Hugging Face's report sidesteps the more lurid aspects of studying the existential risk (or X-Risk) posed by AI agents. Ghosh defined such agents as "computer software systems capable of creating context-specific plans in non-deterministic environments."

However, it did hint at the nightmarish potential outcomes of handing control of critical systems to machines.

The researchers wrote: "The history of nuclear close calls provides a sobering lesson about the risks of ceding human control to autonomous systems. For example, in 1980, computer systems falsely indicated over 2,000 Soviet missiles were heading toward North America.

"The error triggered emergency procedures: bomber crews rushed to their stations and command posts prepared for war. Only human cross-verification between different warning systems revealed the false alarm. Similar incidents can be found throughout history.

"Such historical precedents are clearly linked to our findings of foreseeable benefits and risks. We find no clear benefit of fully autonomous AI agents, but many foreseeable harms from ceding full human control."

What are the worst-case scenarios for autonomous AI?

The Terminator series is a famous exploration of the existential risk of autonomous AI

In true tabloid style, we've broken down some of the risks set out in the report and pulled out the grimmest potential outcomes:

- Accuracy: AI systems can generate false information, leading to critical errors in decision-making.

Worst-case scenario: A fully autonomous AI spreads misinformation in critical domains like healthcare or national security, causing widespread harm.

- Assistiveness: AI can replace human jobs and could widen economic inequality.

Worst-case scenario: Mass unemployment and deepened wealth disparity as AI replaces human workers across industries.

- Consistency: AI-generated outputs can be unpredictable.

Worst-case scenario: An autonomous AI handling safety-critical operations makes erratic decisions, causing system failures or disasters.

- Efficiency: AI can streamline tasks, but errors can cause inefficiencies.

Worst-case scenario: An AI responsible for infrastructure malfunctions, disrupting essential services like power grids or emergency response.

- Equity: AI can reinforce biases present in its training data.

Worst-case scenario: Widespread discrimination in areas like hiring, law enforcement, and financial services exacerbates societal inequalities.

- Flexibility: AI interacting with multiple systems increases security risks.

Worst-case scenario: A fully autonomous AI gains access to financial or governmental systems and causes widespread disruption or theft.

- Humanlikeness: AI’s realism can lead to psychological and social issues.

Worst-case scenario: People form unhealthy dependencies on AI, leading to mass isolation, manipulation, or even AI-driven radicalization.

- Privacy: AI requires vast amounts of personal data, risking exposure.

Worst-case scenario: A large-scale breach leaks sensitive user data, opening the door to identity theft, blackmail, and loss of personal security.

- Relevance: AI personalization can create echo chambers and spread misinformation.

Worst-case scenario: AI-driven propaganda manipulates public opinion on a global scale, destabilizing societies and governments.

- Safety: AI’s unpredictability makes it hard to control.

Worst-case scenario: An AI controlling military or industrial systems overrides human safety protocols, causing catastrophic loss of life.

- Security: AI is vulnerable to hacking and manipulation.

Worst-case scenario: Malicious actors hijack AI systems to conduct cyberattacks, stealing sensitive data or shutting down critical infrastructure.

- Sustainability: AI has a high environmental cost.

Worst-case scenario: The energy and resource consumption of AI accelerates climate change, worsening environmental crises.

- Trust: Over-reliance on AI can lead to dangerous consequences.

Worst-case scenario: People blindly trust AI-driven decisions, resulting in undetected fraud, medical misdiagnoses, or manipulated financial markets.

- Truthfulness: AI-generated misinformation can spread at scale.

Worst-case scenario: AI is used to create deepfake content that destabilizes elections, incites violence, or enables large-scale deception.

Should autonomous AI be banned?

Hugging Face's report is primarily written for developers and provides a framework for understanding and assessing the risks of building increasingly autonomous agents. As such, it does not set out policy recommendations.

However, we couldn't resist asking Ghosh whether autonomous AI should be banned.

"That's a difficult question!" Ghosh answered. "I think, at the very least, simulations are okay to develop with the express purpose of building defence mechanisms against agentified misuses of AI. But I also don’t think banning or halting development is the way to go or even possible, and our paper states our ethical position without necessarily advocating for a ban.

"The open letter to halt AI development didn’t work, for example. In the end, there will always be bad actors, so the bigger focus should be on openness and transparency since it is significantly easier to build safety mechanisms around models that we have complete information about.

"More and more people are switching to open models as they are more trusted while catching up to the performance of closed models, and the same thing would apply to AI agents."
