Invisible "face cloaking" camouflage can dodge facial recognition, defence contractor claims

Researchers discover a new way of protecting your identity with little more than small cosmetic touches.

A US defence firm has discovered a simple way of dodging facial recognition systems to protect identities in a "machine-readable world".

Common facial recognition systems already scrape pictures and other metadata from billions of social media feeds. If you've ever uploaded a picture to a non-anonymous profile, you can easily be identified from CCTV and then tracked down.

David A. Noever and Forrest G. McKee of Peopletec, a company that offers a wide range of "innovations for the evolving battlefield," invented a new technique that offers "face cloaking" without requiring a full disguise.

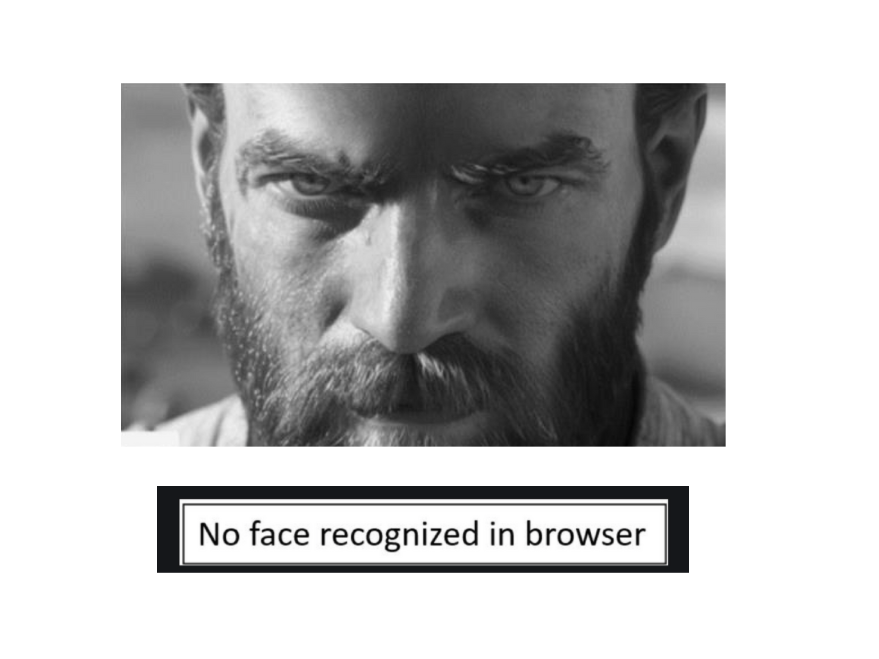

You can see the results in the image at the top of this article, which uses a "novel camera camouflage method" to produce a picture that's easy for humans to recognise but flummoxes AI systems that identify people by scanning their faces.

Although it's probably too late for most people to dodge the global surveillance matrix, if you'll forgive the use of a slightly conspiratorial term, very young people whose parents refused to share images of them on social media could potentially protect their anonymity.

Breaking the algorithm: A better form of facial camouflage

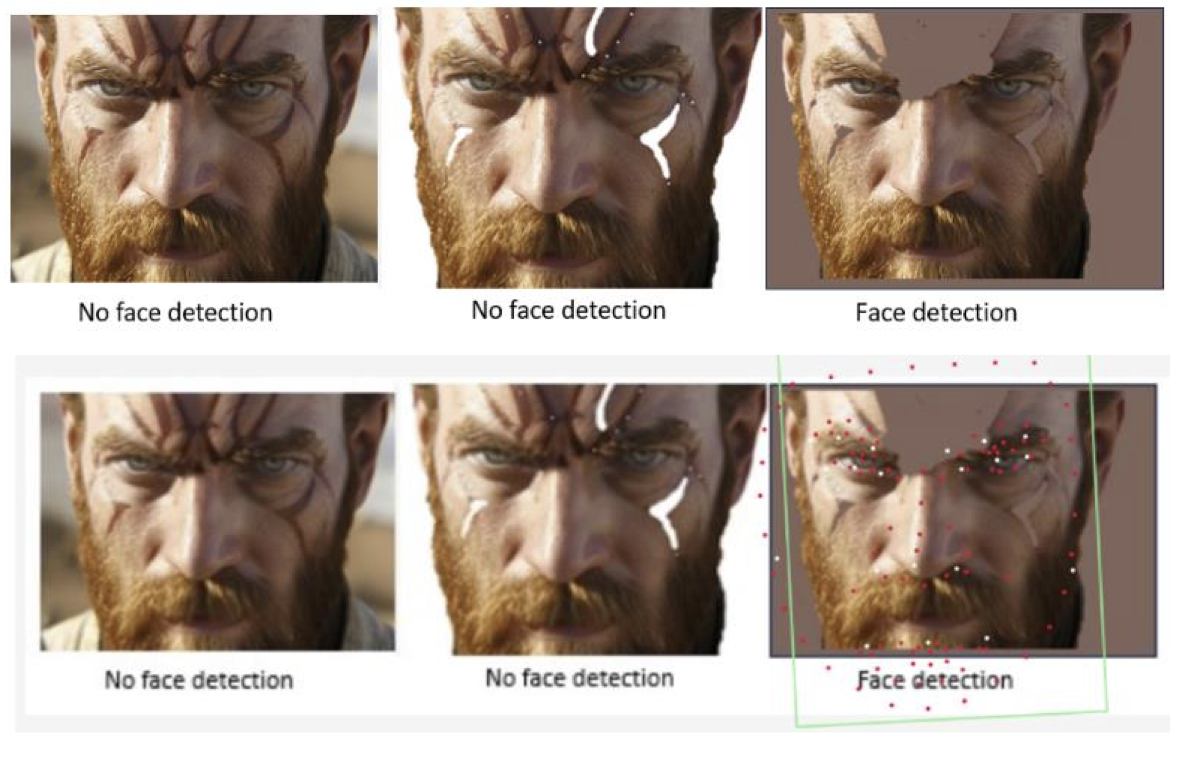

The team found that minimal targeted modifications to a picture file and a cosmetic technique broke the algorithms in commercial and open-source facial recognition models.

They combined subtle cosmetic techniques with an attack targeting alpha transparency layers (hidden or partially transparent pixels) in an image file. These tiny interventions are relatively undetectable to humans - yet prevent machines from identifying a person.

"Faces remain visible to human observers but disappear in machine-readable RGB layers, rendering them unidentifiable during reverse image searches," the team explained. "The results highlight the potential for creating scalable, low-visibility facial obfuscation strategies that balance effectiveness and subtlety, opening pathways for defeating surveillance while maintaining plausible anonymity."

The paper acknowledges that its facial camouflage technique could be put to "nefarious uses", such as concealing a crime or carrying out "scouting, surveilling, or stalking of a target", in a world in which CCTV can identify people both individually and in a crowd.

Beneficial uses include helping "civil libertarians" dodge identification systems, enabling countries to impose anti-surveillance laws, protecting people from doxing and helping to ensure the right to freedom of anonymous assembly is not contravened.

This capability is particularly important in "nations that otherwise restrict or punish public demonstrators," the researchers wrote.

How to dodge facial recognition systems and protect your anonymity

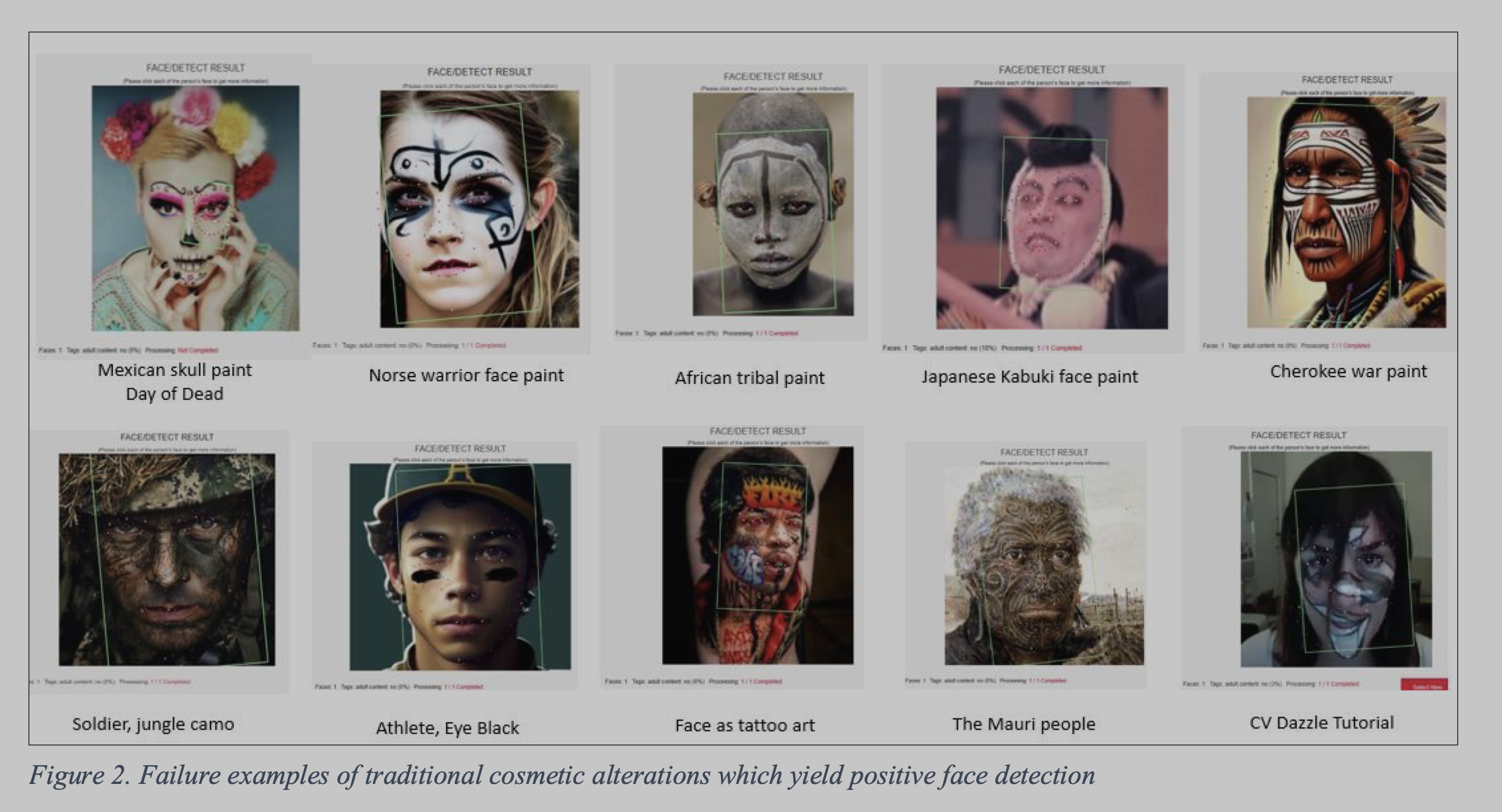

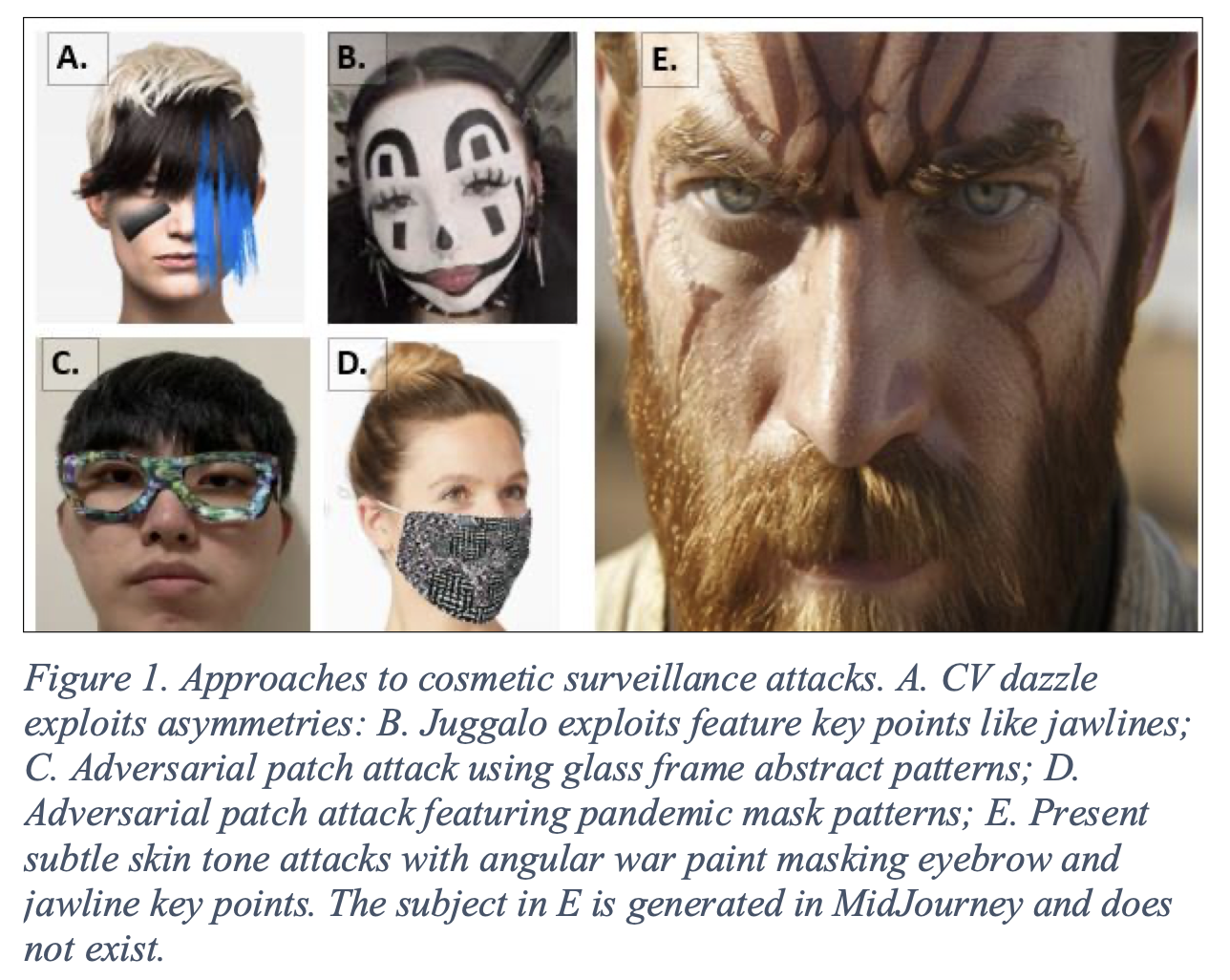

Peopletec's technique involves making small "cosmetic perturbations" to the face which are much subtler than previous techniques you can see in the graphic above.

Common disguises also include "Juggalo" makeup, named after fans of the heavily made-up hip-hop group Insane Clown Posse, who paint their faces in a style reminiscent of Kiss or Alice Cooper.

However, many traditional forms of disguising identity actually end up making faces more identifiable by accentuating "key-point" regions such as the brow, jawline and nose bridge, which algorithms rely on to identify people.

Simply darkening these regions is the first step to staying anonymous, Peopletec said. This can be achieved using picture editing or real-life make-up.

In the study, the defence firm used the GenAI picture-generating model Midjourney to create a face resembling Obi-Wan Kenobi from Star Wars: Revenge of the Sith, which was then subtly edited.

"Effective disruption of facial recognition can be achieved through subtle darkening of high-density key-point regions such as brow lines, nose bridge, and jaw contours," it wrote.
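To make the idea concrete, here is a minimal sketch of region darkening on a greyscale image held as a plain list of rows. It is an illustration only, not the researchers' code: the function name `darken_regions`, the box coordinates and the darkening factor are all hypothetical, and a real workflow would first locate the brow, nose bridge and jaw with a facial landmark detector.

```python
def darken_regions(gray, regions, factor=0.8):
    """Return a copy of `gray` with brightness scaled down inside each box.

    gray    -- list of rows, each a list of 0-255 brightness values
    regions -- (top, left, bottom, right) boxes, bottom/right exclusive;
               hypothetical stand-ins for brow, nose bridge and jaw areas
    factor  -- assumed darkening strength (1.0 leaves pixels unchanged)
    """
    out = [row[:] for row in gray]  # copy so the input is not modified
    for top, left, bottom, right in regions:
        for y in range(top, bottom):
            for x in range(left, right):
                out[y][x] = int(out[y][x] * factor)
    return out


# A flat 4x4 test "image" with a made-up one-row "brow" box across the top.
image = [[200] * 4 for _ in range(4)]
brow_box = [(0, 0, 1, 4)]
darkened = darken_regions(image, brow_box, factor=0.5)
```

Only the pixels inside the box change, which is the point of the approach: the edit is confined to the areas the algorithms are said to depend on. Boxes that overlap would be darkened twice in this simple version.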

The second step is to edit the machine-visible (RGB) layer so that it's unidentifiable, whilst leaving the alpha layer, which shapes what humans see, relatively untouched so that the face stays recognisable.
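One way such a transparency trick can work, sketched here under stated assumptions rather than taken from the paper, is to blank the RGB channels and store the picture in the alpha channel instead. A viewer that composites the image over a white background then shows the face, while software that simply discards alpha reads a blank frame. The function names, the white background and the greyscale input are all assumptions made for illustration.

```python
def encode_in_alpha(gray):
    """Build RGBA pixels with all-black RGB; the picture lives only in alpha."""
    return [[(0, 0, 0, 255 - g) for g in row] for row in gray]


def composite_over_white(rgba):
    """What a human sees: standard 'over' blending against a white page."""
    out = []
    for row in rgba:
        out_row = []
        for r, g, b, a in row:
            blended = tuple((c * a + 255 * (255 - a)) // 255 for c in (r, g, b))
            out_row.append(blended)
        out.append(out_row)
    return out


def drop_alpha(rgba):
    """What a naive machine pipeline sees: the RGB channels only."""
    return [[(r, g, b) for r, g, b, _a in row] for row in rgba]


# Tiny greyscale "photo" standing in for a face.
photo = [[0, 128, 255], [30, 200, 90]]
cloaked = encode_in_alpha(photo)
human_view = composite_over_white(cloaked)   # reproduces the photo
machine_view = drop_alpha(cloaked)           # every pixel is black
```

The sketch only fools software that ignores transparency, and it depends on the viewer's background colour. A pipeline that flattens the image against white before analysis would see the same picture a person does.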

By being undetectable, the camouflage technique mitigates the "Streisand Effect" of putting on a disguise, which the researchers neatly described as a situation in which "obvious efforts to hide one's identity paradoxically draw attention."

The effect is named after an incident in which Barbra Streisand unsuccessfully sued a photographer who had included a picture of her house in an aerial photographic survey of the California coastline, made up of 12,000 pictures taken from Oregon to Mexico.

The case drew so much attention that the pic went viral and was viewed by hundreds of thousands of people.

"Unlike previous work, which either relied on overtly theatrical disguises or failed against modern detectors, this study provides a novel and potentially scalable framework that combines targeted facial perturbations, multi-layered transparency attacks, and generative AI to disrupt machine vision while maintaining human plausibility," the researchers continued.

"By demonstrating successful obfuscation against commercial detection systems and vision-language transformers, this work not only enhances privacy protections but also opens pathways for further research into adversarial camouflage techniques that balance functionality, subtlety, and practical utility."

One limitation of the technique is that it was primarily tested on static images, not on multi-frame video, where movement helps detection. The results also rely on older or selected commercial algorithms; advanced deep-learning detectors may resist these tactics.

"Finally, subtle cosmetic modifications, while less attention-grabbing than traditional disguises, may still invite scrutiny in certain social contexts, especially where facial irregularities could stand out due to cultural or legal norms," Peopletec wrote. "Perhaps a crowd event or sports stadium invites dramatic theatrical modifications without drawing attention but still offer relative obscurity to the wearer from camera surveillance or crowd-counting software.

"These limitations highlight the need for ongoing experimentation across diverse models, environments, and real-world applications to further validate and generalize the findings."
