GenAI vs the human brain: Can ChatGPT really think and reason?

"This is a critical warning for the use of AI in important decision-making areas."

AI is great at copying the patterns found in human creativity. But does that mean it can "think" like a human brain, or is something else going on?

A new study from the University of Amsterdam and the Santa Fe Institute has raised serious questions about the cognitive abilities of GPT models.

The study set out to explore whether AI understands abstract concepts or is simply mimicking patterns.

Researchers found that GenAI performs well on tasks involving analogies but "falls short" when the problems are changed ever so slightly, a finding which highlights a "key weakness" in the reasoning capabilities of artificial intelligence.

They tested models using analogical reasoning, which involves drawing comparisons between two different objects based on their similarities. The researchers described this as "one of the most common methods by which human beings try to understand the world and make decisions."

An example of analogical reasoning is a simple puzzle like: "Bird is to nest as bee is to X."

The answer is, of course, "hive".

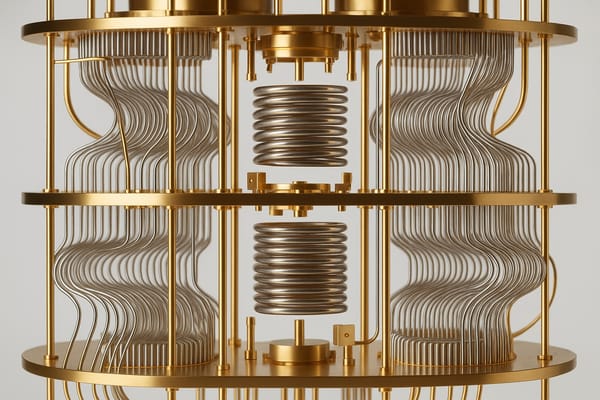

Smart devices: The cognitive abilities of large language models (LLMs)

Large language models like GPT-4 have smashed benchmarks and performed well on a range of tests, including those requiring analogical reasoning. The academics wanted to find out if they "truly engage in general, robust reasoning" or "over-rely on patterns from their training data".

"This is crucial, as AI is increasingly used for decision-making and problem-solving in the real world," said Martha Lewis of the Institute for Logic, Language and Computation at the University of Amsterdam.

Lewis and Melanie Mitchell from the Santa Fe Institute compared the performance of humans and GPT models on three different types of analogy problems:

- Letter sequences: Identify patterns in letter sequences and complete them correctly.

- Digit matrices: Analyse number patterns and determine the missing numbers.

- Story analogies: Understand which of two stories best corresponds to a given example story.

"AI models reason less flexibly than humans"

In addition to testing whether GPT models could solve the original problems, the study examined how well they performed when the problems were subtly modified.

"A system that truly understands analogies should maintain high performance even on these variations," the authors wrote.

Humans achieved high performance on most modified versions of the problems. GPT models performed well on standard analogy problems but struggled with variations.

"This suggests that AI models often reason less flexibly than humans and their reasoning is less about true abstract understanding and more about pattern matching," Lewis explained.

In digit matrices, GPT models exhibited a "significant drop in performance" when the position of the missing number was changed.

Humans had no difficulty with this small challenge.

In the story analogies, GPT-4 tended to choose whichever answer was presented first. Humans were not influenced by the order of the answers.

Additionally, GPT-4 struggled far more than humans when key elements of a story were reworded, suggesting a reliance on "surface-level similarities" rather than deeper causal reasoning.

On simpler analogy tasks, GPT models showed a decline in performance when tested on modified versions, while humans remained consistent. Both humans and AI struggled with more complex analogical reasoning tasks.

This research challenges the widespread assumption that AI models like GPT-4 can reason in the same way humans do.

"While AI models demonstrate impressive capabilities, this does not mean they truly understand what they are doing," the two authors concluded.

"Their ability to generalize across variations is still significantly weaker than human cognition. GPT models often rely on superficial patterns rather than deep comprehension."

They also issued the following cautionary note.

"This is a critical warning for the use of AI in important decision-making areas such as education, law, and healthcare. AI can be a powerful tool, but it is not yet a replacement for human thinking and reasoning."