Cyber shadows: A new name for an emerging AI ecosystem threat

GenAI is amplifying threats and exposing hidden dangers, increasing organisational and systemic risk.

It's always pleasing to hear a new word or phrase introduced to the lexicon. Earlier this week, we heard about the genesis of the Claude Boys - an unfortunately fake group of AI-obsessed teens guided by a very memorable maxim.

Now we're glad to see another attempt to plant a concept in the collective subconscious of the tech world: an interesting paper about "cyber shadows" by a University of Oxford economist and a Senior IT Partner at Siemens Financial Services.

This new phrase describes threats that AI directly creates, as well as those it causes through second-order effects. Essentially, it refers to the dark shadow that AI casts across the ecosystem.

The researchers explained: "The digital age, driven by the AI revolution, brings significant opportunities but also conceals security threats, which we refer to as cyber shadows. These threats pose risks at individual, organizational, and societal levels."

"Cyber shadows refer to the hidden or amplified security threats that emerge within digital ecosystems due to the use of advanced AI technologies," they continued. "These threats may be direct, as seen in AI-driven attacks that enhance traditional cyber threats, or indirect, that impact the wider digital ecosystem."

The paper calls these system-wide threats "negative externalities", a term taken from economics and used in this context to mean: "Unintended and often widespread consequences that affect third parties or the system at large, such as data breaches or the erosion of trust in digital interactions."

"In the context of generative AI, these externalities manifest as broader impacts on organizations and societies, such as data breaches at the firm level, where the sophistication of AI-driven attacks leads to increased costs, potential reputational damage, or the erosion of trust in digital systems, which weakens confidence in technology due to the pervasive use of AI-generated content and misinformation," the researchers explained.

"Native AI threat amplification"

We know that critical sectors such as energy, healthcare and financial services are vulnerable to cyberattacks, which can cause cascading disruption and failures across the financial system and the superstructure of civilisation.

Cyber shadows amplify the risk posed by traditional threats as well as creating new dangers.

The paper said direct cyber shadows in AI systems are caused by "off the shelf" characteristics and capabilities of LLMs rather than their misuse. They include:

- Automated code creation: This threat is created by poorly written and insecure code churned out by AI models. "The integration of AI into critical infrastructure without comprehensive security measures could lead to new attack vectors, potentially destabilizing essential services," the researchers wrote.

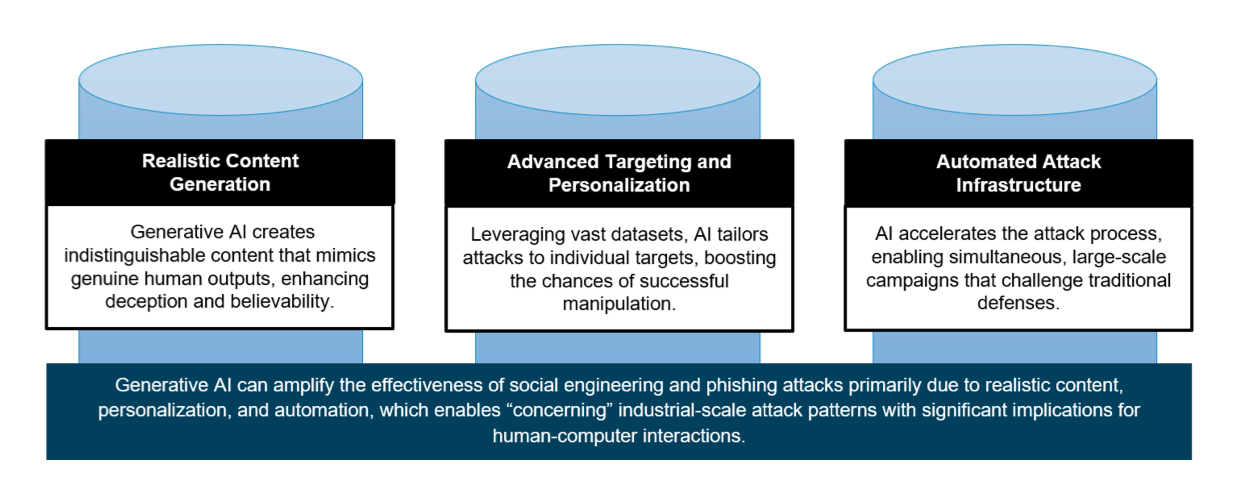

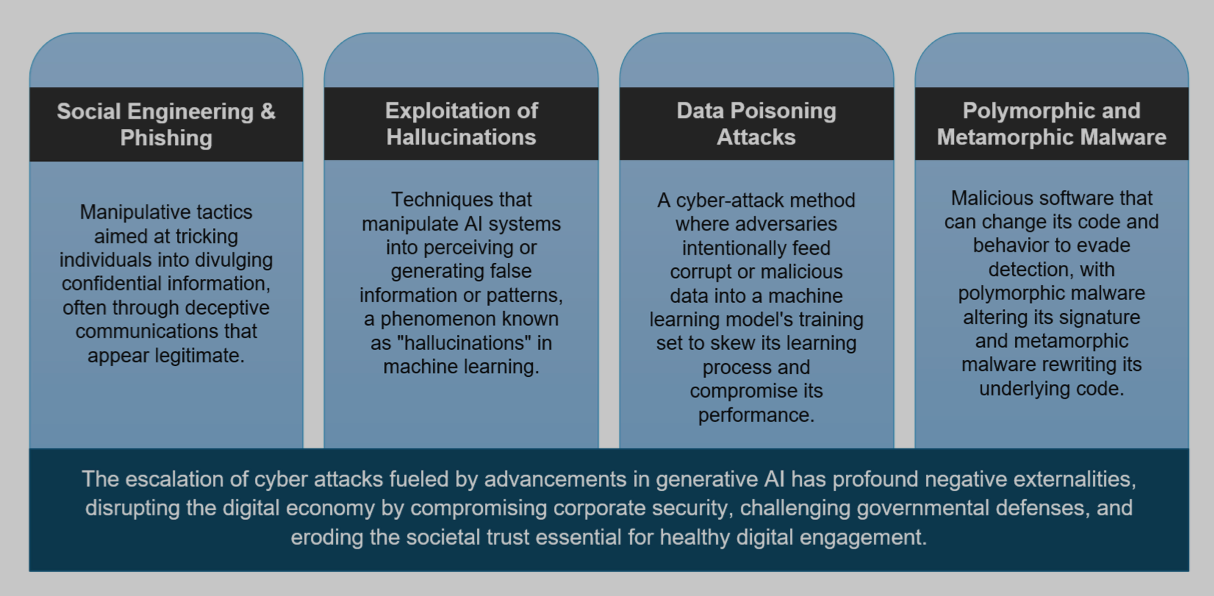

- Phishing and social engineering: GenAI can generate and personalise content at scale. In future, AI botnets could autonomously target vast numbers of human victims simultaneously, learning individuals' weaknesses, exploiting them, and then using this information to manipulate groups more effectively.

- Exploitation of hallucinations: "As code-assistants and chat-bots often generate responses for URLs that do not exist in reality, attackers can leverage these responses and host their malicious code in these links," the researchers wrote. "Once a user receives these responses from the AI assistant, they will download the malicious code."

- Data poisoning: The deliberate manipulation of training data to compromise the performance or behaviour of machine learning models. In data poisoning attacks, an adversary introduces malicious or misleading data into the dataset used to train the model, aiming to influence its outputs or decisions. This can cause models to produce incorrect predictions, exhibit biased behaviour, or even create vulnerabilities that attackers can exploit.

- Polymorphic and metamorphic malware: Two sophisticated forms of malicious software designed to evade detection. "Polymorphic malware changes its code or signature patterns with each iteration, making it challenging for traditional signature-based detection methods to identify them consistently. In contrast, metamorphic malware goes a step further by not only changing its appearance but also altering its underlying code, essentially rewriting itself completely. This makes metamorphic malware even more elusive as it can vary its behaviour and structure between infections."
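To make data poisoning more concrete, here is a minimal, hypothetical sketch in Python. It is not taken from the paper: it assumes a toy one-dimensional nearest-centroid classifier, and the function names and data points are illustrative inventions. An attacker injects a handful of deliberately mislabelled points into the training set, which drags one class's centroid towards the other class and lowers accuracy on clean test data the attacker never touches.

```python
# Toy illustration of data poisoning (hypothetical example, not from the paper).
# A 1-D nearest-centroid classifier is trained once on clean data and once on
# data into which an attacker has injected mislabelled points.

def train_centroids(data):
    """Return the mean feature value for each label."""
    sums, counts = {}, {}
    for x, label in data:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def accuracy(centroids, data):
    """Fraction of (x, label) pairs the model classifies correctly."""
    correct = sum(predict(centroids, x) == label for x, label in data)
    return correct / len(data)


# Clean training set: class 0 sits around x=2, class 1 around x=8.
clean_train = [(x, 0) for x in (0, 1, 2, 3, 4)] + [(x, 1) for x in (6, 7, 8, 9, 10)]

# Poison: ten copies of a point deep in class-1 territory, deliberately labelled 0.
poison = [(10, 0)] * 10
poisoned_train = clean_train + poison

# Held-out test data that the attacker never touches.
test = [(x, 0) for x in (0.5, 1.5, 2.5, 3.5, 4.5)] + [(x, 1) for x in (5.5, 6.5, 7.5, 8.5, 9.5)]

clean_model = train_centroids(clean_train)
poisoned_model = train_centroids(poisoned_train)

print("clean accuracy:   ", accuracy(clean_model, test))     # 1.0
print("poisoned accuracy:", accuracy(poisoned_model, test))  # 0.7
```

The injected points pull the class-0 centroid from 2.0 to roughly 7.33, so the decision boundary shifts from 5 to roughly 7.67 and three class-1 test points are misclassified. Attacks on real models are typically far subtler, but the principle is the one the researchers describe: corrupted training data changes what the model learns.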

One of the main direct threats of GenAI lies in its commoditization of social engineering and the capabilities it grants criminals, who can now easily launch phishing attacks, produce realistic forgeries, or automate the generation of malicious code at a scale and sophistication that was previously unimaginable.

The individual and collaborative capabilities of GenAI models will continue to grow, perhaps exponentially. The researchers warned that AI-enabled agents could be used to automate most, and possibly all, of the cyberattack kill chain - learning from each attack as they go to iteratively improve their success rate.

LLMs can be compromised through jailbreaking, prompt injection, reverse psychology and other techniques designed to manipulate a model into breaking its conditioning, the authors pointed out.

The authors also identified "serious flaws" in the trust users place in LLMs when interacting with them, noting that the models can be "easily distracted" by irrelevant material, which limits both their performance and their consistency.

The paper continued: "Artificial agents have traditionally been trained to maximize reward, which may incentivize power-seeking and deception, analogous to how next-token prediction in language models (LMs) may incentivize toxicity. This often leads to harmful and anti-social behaviour that needs to be mitigated during training."

Casting light into the cyber shadows

To defeat cyber shadows, the authors put forward an approach that combines technology with "targeted policy interventions".

They recommended developing capabilities such as "AI-driven threat (shadow) hunting" using automated Intrusion Detection Systems (IDS), as well as "adversarial perturbations": small, deliberate changes to images that make them harder to alter maliciously.

The academics also called for carefully crafted regulations that deliver control and protection without stifling innovation.

This combination of tech and regulatory measures can create a "multilevel defence capable of addressing both direct threats and indirect negative externalities", they said.

Their suggested approach is somewhat reminiscent of Open Banking, a relatively new model in finance that pairs technology with regulation: banks deploy APIs and infrastructure to enable the sharing of account data, while regulators encourage (or force) them to do so.

"This paper contributes to the ongoing efforts to build a safer digital world by addressing the growing cybersecurity challenges posed by artificial intelligence," the researchers wrote. "A strategy that combines AI-driven security technologies with policy measures [can] protect individuals, businesses, and society from evolving cyber threats.

"Our work aims to not only neutralize these threats but also safeguard essential values like privacy, fairness, and security in the digital economy. The ultimate goal is to foster secure and resilient digital ecosystems that can adapt to the rapidly changing landscape of AI-driven cyberattacks."

The paper was written by Dr. Marc Schmitt, Senior IT Partner for Strategic Development at Siemens Financial Services, and Pantelis Koutroumpis, Lead Economist and Director of the Programme of Technological and Economic Change at the Oxford Martin School.

So will the phrase cyber shadows catch on? Let's see. The threat certainly isn't going away, so it deserves a good name to remember it by.

Personally, I'd prefer a threat to an ecosystem to be named after something in nature: AI blight, perhaps. Threat amplification is another good one, as long as nobody puts "AI" at the start of the phrase and creates the acronym AITA (Am I the Asshole?).

But I get where the authors are going with cyber shadows.

Contact jasper@machine.news to tell us about your big ideas or new concepts and to hear how our words can help achieve your business goals.

Have you got a story or insights to share? Get in touch and let us know.