Big ideas for 2025: AI will put more pressure on security teams, nation-states and the planet

An assessment of how artificial intelligence will reshape the threat landscape over the next 12 months, from multi-agent AI systems to a growing regulatory burden.

In the latest edition of our Big Ideas for 2025 series, Dan Karpati, VP of AI Technologies at Check Point Software, discusses how a surge in AI usage will have far-reaching implications for energy consumption and software, requiring a reappraisal of development practices and ethical and legal frameworks.

"This year will be pivotal for AI and cyber security. While AI offers unprecedented opportunities for advancement, it also presents significant challenges. Close cooperation between the commercial sector, software and security vendors, governments, and law enforcement will be needed to ensure this powerful, quickly developing technology doesn’t damage digital trust or our physical environment. As cyber security professionals, we must stay ahead of these developments, adapting our strategies to harness the power of AI while mitigating its risks.

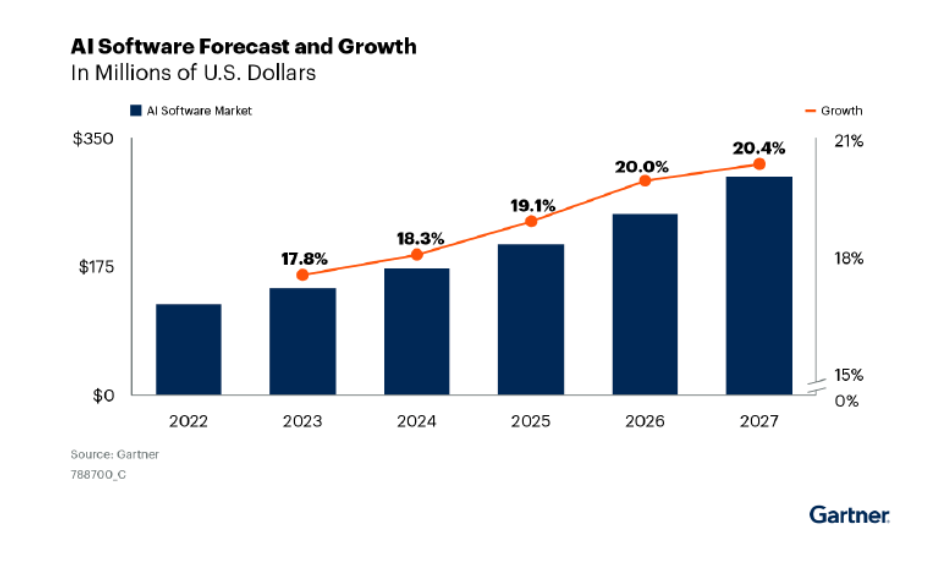

Accelerating AI adoption

"The adoption of AI technologies is accelerating at an unprecedented rate. ChatGPT, for instance, reached 100 million users just 60 days after its launch and now boasts over 3 billion monthly visits. This explosive growth is not limited to ChatGPT; other AI models like Claude, Gemini, and Midjourney are also seeing widespread adoption. By the end of 2024, 92% of Fortune 500 companies had integrated generative AI into their workflows, according to the Financial Times.

Dimension Market Research also predicts that the global large language model market will reach $140.8 billion by 2033. AI technologies require enormous amounts of computing resources, so their rapid adoption is already driving a huge increase in the land, water, and energy required to support them. The magnitude of this AI-driven strain on natural resources will be felt in 2025.

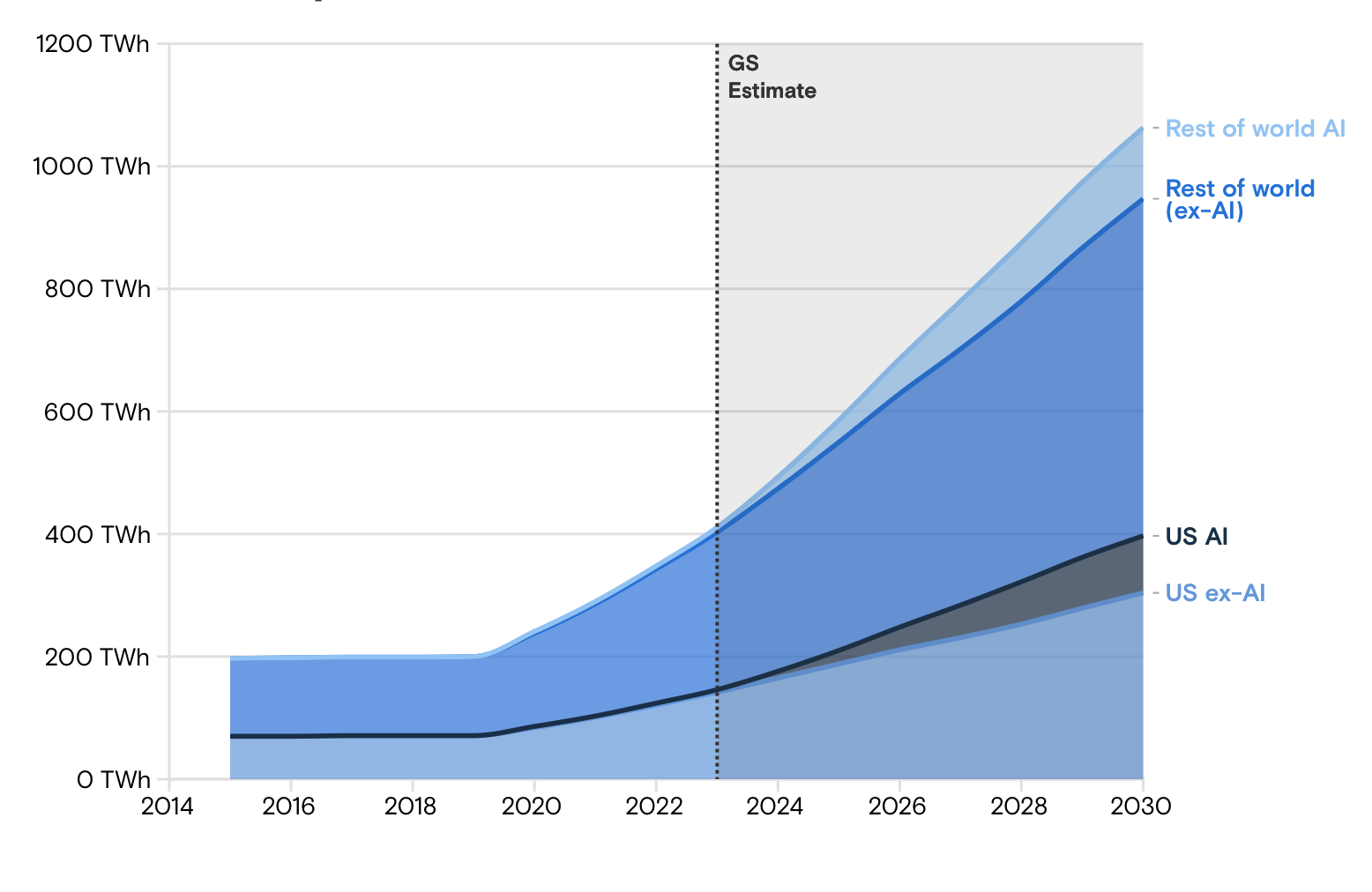

Increasing energy demands

"The proliferation of AI is putting immense strain on global energy resources. Data centres, the backbone of AI operations, are multiplying rapidly: according to McKinsey, their numbers doubled from 3,500 in 2015 to 7,000 in 2024. They require land, energy, and water, three precious natural resources that are already strained without the added demands of surging AI use. Deloitte projects that energy consumption by these centres will skyrocket from 508 TWh in 2024 to a staggering 1,580 TWh by 2034, equivalent to India’s entire annual energy consumption.

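To put the figures quoted above on a per-year footing, the short sketch below works out the constant annual growth rate each projection implies. It assumes smooth compound growth between the two quoted endpoints, which is a simplification and not something McKinsey or Deloitte state; the function name is illustrative.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Endpoints as quoted in the article; the compounding assumption is ours.
energy_rate = implied_annual_growth(508, 1580, 2034 - 2024)   # TWh, Deloitte projection
count_rate = implied_annual_growth(3500, 7000, 2024 - 2015)   # data centres, McKinsey

print(f"Energy consumption: {energy_rate:.1%} per year")
print(f"Data centre count:  {count_rate:.1%} per year")
```

Under that assumption, energy consumption grows by roughly 12% a year and the number of data centres by roughly 8% a year, so the projected energy use rises faster than the historical growth in facilities.
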
Unsustainable growth?

"Analysts such as Deloitte have warned that the current system is quickly becoming unsustainable. Goldman Sachs Research estimates that data centre power demand will grow 160% by 2030, with AI representing about 19% of data centre power demand by 2028. This unprecedented demand for energy necessitates a shift towards more sustainable power sources and innovative cooling solutions for high-density AI workloads. Although there has been much innovation in harvesting alternative energy sources, nuclear energy will most likely be tapped to power the AI-fuelled rise in energy consumption.

"However, compute technology itself will also become more efficient with advancements in chip design and workload planning. Since AI workloads often involve massive data transfers, innovations like compute-in-memory (CIM) architectures, which significantly reduce the energy required to move data between memory and processors, will become essential. These architectures integrate processing within the memory itself, eliminating the separation between compute and memory units to improve efficiency. These and other disruptive technologies will emerge to help manage the growing computational needs in an energy-efficient manner.

Responsible AI will transform software development

"AI is set to revolutionise software programming. We are moving beyond simple code completion tools like GitHub Copilot to full code creation platforms such as CursorAI and Replit.com. While this advancement promises increased productivity, it also poses significant security risks. AI gives any cyber criminal the ability to generate complete malware from a single prompt, ushering in a new era of cyber threats. The barrier to entry for cyber criminals will disappear as these tools become more sophisticated and widespread, making our digital world far less secure.

"This threat will fuel the field of responsible AI. Responsible AI refers to the practice of AI vendors building guardrails to prevent weaponisation or harmful use of their large language models (LLMs). Software developers and cyber security vendors should team up to achieve it. Security vendors have deep expertise in understanding the attacker’s mindset, which lets them simulate and predict new attack techniques. Penetration testers, ‘white hat’ hackers, and ‘red teams’ are dedicated to finding ways software can be exploited before malicious hackers do, so vulnerabilities can be secured proactively. New responsible AI partnerships between software and cyber security vendors are needed to test LLMs for possible weaponisation.

The rise of multi-agent AI

In 2025, we will see the emergence of multi-agent AI systems in both cyber-attacks and defence. AI agents are the next phase of evolution for AI copilots. They are autonomous systems that make decisions, execute tasks, and interact with environments, often acting on behalf of humans. They operate with minimal human intervention, communicate with other entities, including AI agents, databases, sensors, APIs, applications, websites, and emails, and adapt based on feedback.

"Attackers use them for coordinated, hard-to-detect attacks, while defenders employ them for enhanced threat detection, response, and real-time collaboration across networks and devices. Multi-agent AI systems can defend against multiple simultaneous attacks by working together to share real-time threat intelligence and coordinate defensive actions, identifying and mitigating attacks more effectively.

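As a rough illustration of the defensive pattern described above, the sketch below shows two agents sharing threat intelligence through a common store and acting on each other's findings. Every name here (`ThreatBoard`, `DefenderAgent`, the failed-login threshold) is hypothetical and chosen for illustration; it is a toy model of the idea, not a description of any vendor's product.

```python
from dataclasses import dataclass, field


@dataclass
class ThreatBoard:
    """Hypothetical shared store where agents publish indicators of attack."""
    indicators: set[str] = field(default_factory=set)

    def publish(self, indicator: str) -> None:
        self.indicators.add(indicator)


@dataclass
class DefenderAgent:
    """Hypothetical agent guarding one part of the network."""
    name: str
    board: ThreatBoard
    blocked: set[str] = field(default_factory=set)

    def observe(self, source_ip: str, failed_logins: int, threshold: int = 5) -> None:
        # Local detection: an assumed, deliberately simple brute-force heuristic.
        if failed_logins >= threshold:
            self.board.publish(source_ip)

    def sync(self) -> None:
        # Coordinated response: block every source that any peer has reported.
        self.blocked |= self.board.indicators


board = ThreatBoard()
edge = DefenderAgent("edge", board)
mail = DefenderAgent("mail", board)

edge.observe("203.0.113.7", failed_logins=12)  # edge agent detects an attack
edge.observe("198.51.100.4", failed_logins=1)  # below threshold, not reported
for agent in (edge, mail):
    agent.sync()

print(sorted(mail.blocked))
```

The mail agent ends up blocking an address it never saw itself, which is the point of sharing intelligence: a detection made in one place becomes a defensive action everywhere.
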
Ethical and regulatory challenges

"As AI becomes more pervasive, we will face increasing ethical and regulatory challenges. Two important laws are already set to go into effect in 2025. The first provisions of the EU’s AI Act will apply from February 2, 2025, with additional provisions rolling out on August 2. In the US, a new AI regulatory framework is expected to be implemented through the National Defense Authorization Act (NDAA) and other legislative measures, with key provisions beginning in early 2025. These new laws will force enterprises to exert more control over their AI implementations, and new AI governance platforms will emerge to help them build trust, transparency, and ethics into AI models.

In 2025, we can expect a surge in industry-specific AI assurance frameworks to validate AI’s reliability, bias mitigation, and security. These frameworks will enhance the transparency of AI-generated outputs, prevent harmful or biased results, and foster confidence in AI-driven cyber security tools.

Conclusion: a big year for AI and security teams

The year 2025 promises to be pivotal for AI and cyber security. While AI offers unprecedented opportunities for advancement, it also presents significant challenges. Close cooperation between the commercial sector, software and security vendors, governments, and law enforcement will be needed to ensure this powerful, quickly developing technology doesn’t damage digital trust or our physical environment. As cyber security professionals, we must stay ahead of these developments, adapting our strategies to harness the power of AI while mitigating its risks.
