Behind the five-star facade: How AI is making product reviews totally unreliable

Study finds that GenAI testimonials are hyper-positive and totally convincing, putting humans at risk of manipulation.

Online reviews are becoming a shockingly useless way of gauging the quality of a product due to the proliferation of super-positive AI-generated nonsense.

Now it's been revealed that many consumers cannot even tell the difference between reviews written by actual humans and those generated by AI.

Researchers have found that AI reviews are much more likely to be glowing endorsements than those written by humans, but they are often less detailed, and they are packed with false information.

A study by Yingting Wen, a Professor of Marketing at Emlyon Business School, and Sandra Laporte, a Professor of Marketing at Toulouse School of Management, set out to examine whether ChatGPT and other GenAI tools could create narratives that resonate with shoppers as effectively as those written by humans.

They found that human-written content was more genuine and less positive than AI-generated content. However, most consumers could not tell when bots were lurking behind the five-star facade - leaving them, we extrapolate, at greater risk of manipulation.

The researchers wrote: "Automatic text analysis reveals that while reviews generated by ChatGPT 3.5 exhibit lower levels of embodied cognition and lexical diversity compared with reviews by human experts, they display more positive affect.

"However, human raters struggle to notice these differences, rating half of the selected reviews from AI higher in embodied cognition and usefulness. For social media posts, the more sophisticated ChatGPT 4 model demonstrates superior perceived lexical diversity and leads to higher purchase intentions in unbranded content compared with human copywriters."

In other words, the latest AI models were in some cases more effective than human writers, and consumers couldn't tell the difference anyway.

“As generative AI tools like ChatGPT are increasingly used in marketing, they help automate tasks like crafting social media posts and responding to customer comments, resulting in higher engagement and increased purchase intent,” said Professor Wen.

“However, research shows that while AI-generated content can be effective, it still lacks the nuanced understanding and authentic voice that human creators bring to marketing, therefore human input is still needed in the process.”

Can you trust online reviews in the age of AI?

Not if they are positive. Humans are known to be more likely to leave a negative review than to wax lyrical about a product they've loved, so bad reviews are the safer bet. When you see those five stars shining, it's time to ask deeper questions.

Researchers from DoubleVerify found there were three times as many AI-powered fake app reviews in 2024 compared to the same period in 2023.

"Fake reviews often serve to artificially inflate an app’s credibility, persuading users to install them," the company wrote. "For instance, we’ve identified apps with thousands of five-star ratings, where many are convincingly crafted by AI. While some fake reviews are subtly deceptive, others are more easily identified by telltale phrases typical of generative AI tools, such as 'I’m sorry, but as an AI language model…'"

These "mechanisms of deception" pose a major risk to consumers, who may be tricked into downloading malware-laced applications.

"Users who download these apps often find themselves bombarded with an overwhelming number of out-of-context ads, akin to websites created solely to display advertisements," DoubleVerify wrote. "This approach disrupts the user experience and diminishes the app’s long-term viability as frustrated users eventually uninstall these deceptive apps. But even after users uninstall the apps, they still display traffic using falsification schemes."

What's being done to combat fake reviews?

In August, the Federal Trade Commission announced a final rule that will prohibit the sale or purchase of false testimonials and allow the agency to seek civil penalties against "knowing violators".

“Fake reviews not only waste people’s time and money, but also pollute the marketplace and divert business away from honest competitors,” said FTC Chair Lina M. Khan. “By strengthening the FTC’s toolkit to fight deceptive advertising, the final rule will protect Americans from getting cheated, put businesses that unlawfully game the system on notice, and promote markets that are fair, honest, and competitive.”

How to spot fake AI reviews

- Uniform syntax and style across different users: A lack of variation in writing style is a strong red flag. Fake reviews often share a uniform tone, sentence structure, or phrasing, even when supposedly written by different users. For instance, all reviews may have overly polished grammar or a repetitive way of expressing enthusiasm.

- Unusual formatting: Keep an eye out for peculiar formatting choices, such as every word being capitalised in a sentence like: “This Product Is Amazing”. This could indicate a generated template rather than authentic user input.

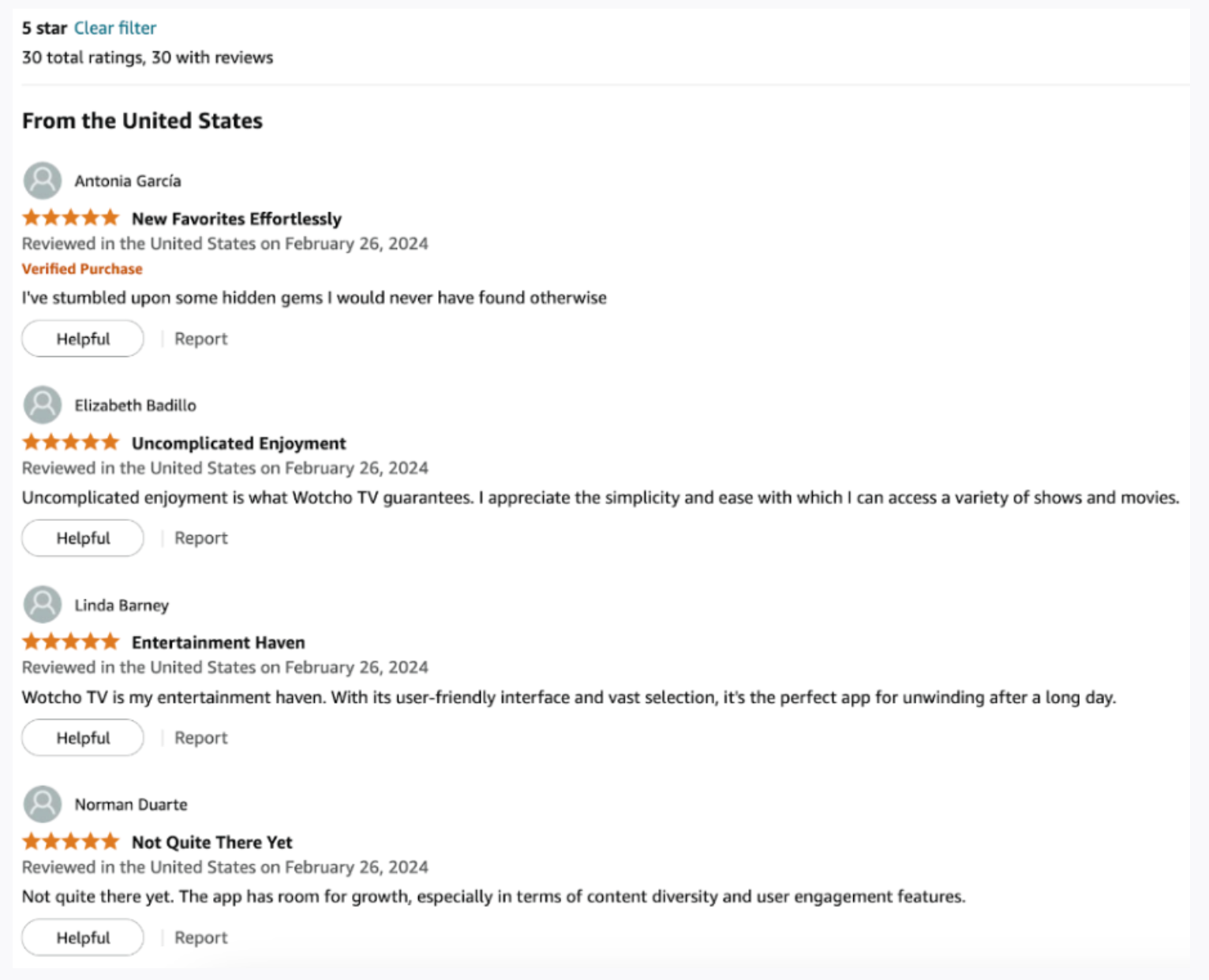

- Suspiciously positive ratings despite critical comments: Fake reviews might give consistently five-star ratings, even when the content of the review includes minor complaints or criticisms, such as “not quite there yet.” This inconsistency between rating and commentary can indicate manipulation.

- Overuse of written reviews: Genuine users often leave ratings without a written review, as it’s not typically required. If every single rating is accompanied by a detailed write-up, especially in ecosystems where this isn’t the norm, it may suggest an automated approach.

- Activity exclusively within one ecosystem: Fake reviewers often have no activity outside a single platform or app. For example, they might have accounts with no history of engagement elsewhere, focusing solely on boosting one product or service.

- Generic and vague language: AI-generated reviews often avoid specifics, using generic phrases like “excellent service,” “highly recommend,” or “works great.” Real reviews tend to include personalised details about the user’s experience.

- Excessive use of buzzwords: Reviews may be packed with marketing language, like “life-changing,” “unparalleled,” or “innovative,” with little to no concrete evidence or examples to back up the claims.

- Strange timing clusters: Look out for an unusual number of reviews posted in a short time frame. Fake review campaigns often occur in bursts to quickly inflate ratings.

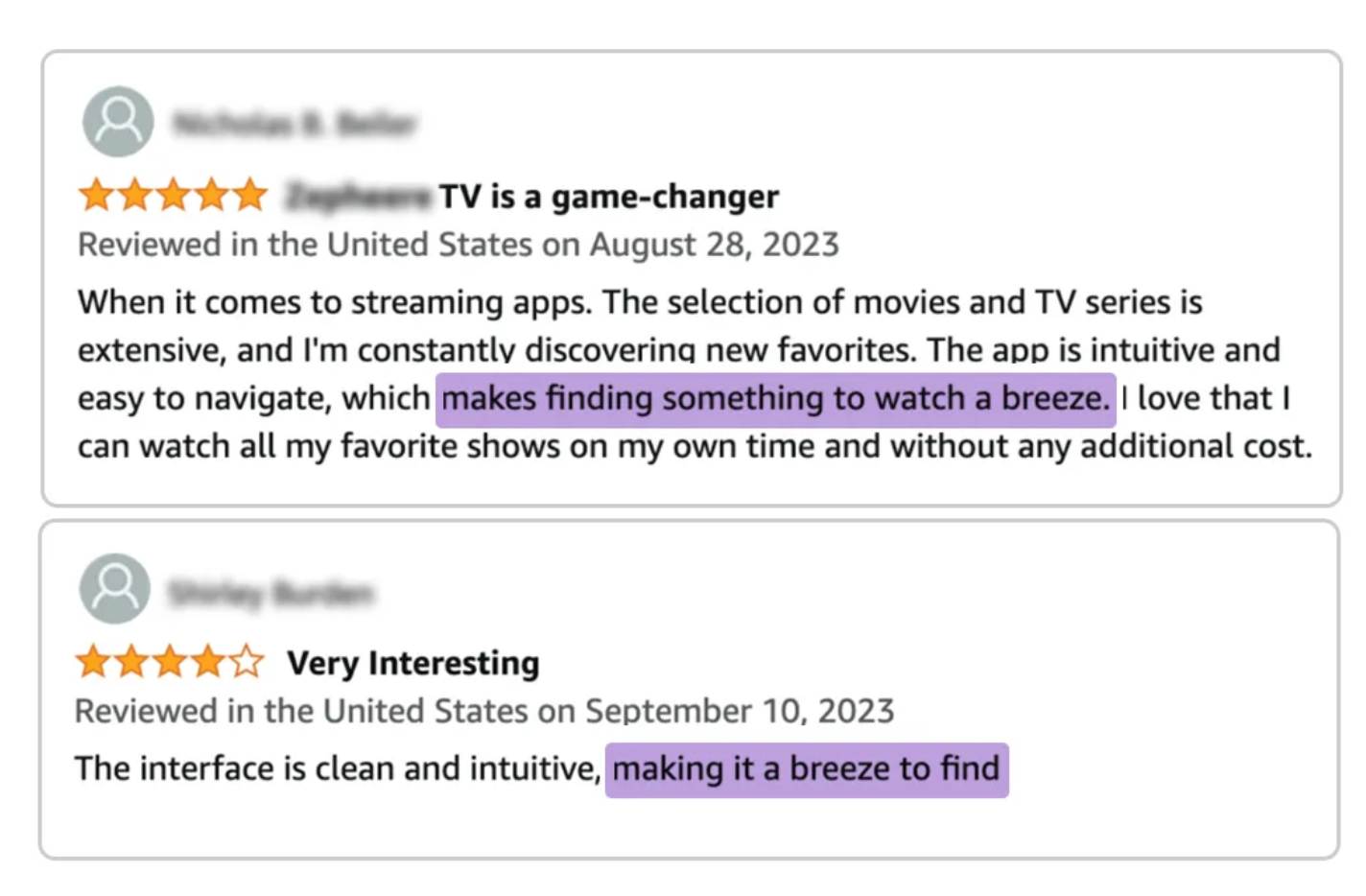

- Repetitive keywords or phrases: AI reviews often repeat the same keywords or phrases, such as the product’s name or key features, to maximise SEO impact. This repetition can make the reviews feel robotic or unnatural.

- Lack of emotional nuance: Human reviewers express a range of emotions and subjective experiences. AI reviews tend to sound either overly neutral or unnaturally enthusiastic, without much emotional depth.

- Lack of mistakes: Humans often misspell words or use dodgy grammar (see the human-made posts here on Machine for evidence of this unfortunate reality). AI will produce close-to-perfect text.
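A few of the red flags above are mechanical enough to check automatically. The Python sketch below is a rough illustration only, not a method used by DoubleVerify or the researchers: the `Review` record, the word lists, the thresholds and the one-hour window are all assumptions made up for the example, and a real detector would need far more signal than this.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Illustrative word lists taken from the examples in the article.
# They are assumptions for this sketch, not a validated lexicon.
BUZZWORDS = {"life-changing", "unparalleled", "innovative"}
CRITICAL_PHRASES = ("not quite there yet",)


@dataclass
class Review:
    """Hypothetical review record; field names are invented for this sketch."""
    author: str
    text: str
    stars: int
    posted: datetime


def is_title_cased(text: str) -> bool:
    """True when every word starts with a capital, e.g. 'This Product Is Amazing'."""
    words = [w for w in text.split() if w[0].isalpha()]
    return len(words) >= 3 and all(w[0].isupper() for w in words)


def buzzword_count(text: str) -> int:
    """Count occurrences of the marketing buzzwords listed above."""
    lowered = text.lower()
    return sum(lowered.count(b) for b in BUZZWORDS)


def rating_mismatch(review: Review) -> bool:
    """True for a five-star rating whose text contains a critical phrase."""
    lowered = review.text.lower()
    return review.stars == 5 and any(p in lowered for p in CRITICAL_PHRASES)


def largest_burst(reviews: list[Review], window: timedelta = timedelta(hours=1)) -> int:
    """Largest number of reviews posted within any single time window."""
    times = sorted(r.posted for r in reviews)
    best = 0
    start = 0
    for end, t in enumerate(times):
        # Slide the start of the window forward until it spans at most `window`.
        while t - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


def red_flags(review: Review) -> list[str]:
    """Collect the per-review warning signs that apply."""
    flags = []
    if is_title_cased(review.text):
        flags.append("title-case formatting")
    if buzzword_count(review.text) >= 2:  # threshold is an arbitrary assumption
        flags.append("buzzword-heavy")
    if rating_mismatch(review):
        flags.append("five stars despite criticism")
    return flags


if __name__ == "__main__":
    # Made-up sample data, purely for demonstration.
    base = datetime(2024, 1, 1, 12, 0)
    sample = [
        Review("a", "This Product Is Amazing", 5, base),
        Review("b", "Life-changing and unparalleled, though not quite there yet.",
               5, base + timedelta(minutes=5)),
        Review("c", "Strap broke after two weeks of daily runs.",
               2, base + timedelta(days=3)),
    ]
    for r in sample:
        print(r.author, red_flags(r))
    print("largest burst:", largest_burst(sample))
```

None of these checks proves a review is fake. Plenty of real people write short, enthusiastic, well-spelled reviews, so treat a hit as a reason to look closer rather than a verdict.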
