2025 AI predictions: AGI, GenAI, pilot purgatory and a plateau of productivity

Find out what will happen as AI hype turns to action over the next year.

It's easy to get swept up in the hype of AI. Believe the bluster of someone like OpenAI boss Sam Altman, and it may seem like the arrival of artificial general intelligence (AGI) is just months away.

Whether these predictions come to pass or the sceptics are right about the bubble popping, AI will play a central role in many industries throughout the next year.

Here are some predictions about AI in 2025 from the Machine community. We're taking a holiday until the New Year - so hope to see you back here in January 2025. Thanks for your support so far.

It's AI or bust...

Jeff Hollan, Head of Applications and Developer Platform, Snowflake

“Managers will be forced to face the music on implementing AI. As organizations feel increased pressure from their leadership and boards to implement AI, managers will need to learn how to proactively identify areas within their own teams that can be automated, and enable their teams to successfully implement AI to do so.

"Make no mistake: learning to proactively identify these opportunities and adequately enable your team to capitalize on them is a skill that needs to be developed. At the same time, managers must also keep up with their teams’ gains in productivity to stay on top of workloads and avoid becoming a bottleneck.

"For example, if a team becomes 15% more productive with AI, their manager also needs to become 15% more productive to keep up. It’s imperative that managers not only encourage AI adoption across their teams, but also “walk the walk” themselves and keep up with team-wide productivity gains.”

AI breaks out of "pilot purgatory"

Kristof Symons, International CEO of Orange Business

“As AI and generative AI hit peak hype, businesses are asking: where’s the real value? The answer lies in breaking free from 'pilot purgatory' and scaling AI to deliver meaningful outcomes.

“Success starts with full buy-in from the C-suite and employees alike. When leaders champion AI as a strategic priority, it sends a clear signal: we’re all in this together. Transparency and education are key: show employees how AI can simplify their work and drive better results. And don’t make AI a luxury for a select few; democratise it across the organisation to avoid resistance and foster collaboration.

“Quick wins are essential. Start small, show results, and build momentum. But scaling AI requires more than excitement—it demands clean, relevant data, seamless integration, and a focus on security. As we head into 2025, the winners will be those who strip away the hype and deliver real, measurable value. Align AI with business goals, empower employees, and use it to create lasting change.”

The rise of Shadow AI

Cathy Mauzaize, President, EMEA, ServiceNow

“There was a time during the rise of BYOD and rapid digital transformation when IT teams were primarily concerned with the impact of ‘shadow IT’—an unregulated growth in the use of apps and devices outside traditional business controls. This led to fragmented systems, compliance risks, and inefficiencies that required large investments of time and resource to address. As AI transitions from hype to its own ‘iPhone moment’ in mainstream business, the lessons from that not-so-distant era serve as a cautionary tale.

" The deployment of AI introduces both operational risks and broader strategic, reputational, and ethical concerns. Missteps in AI governance—such as algorithmic bias, misuse of data, or poorly defined accountability—can lead to significant regulatory penalties and erode trust with customers, partners, and employees. Amazon’s experiment in AI recruiting which revealed gender bias in the tool’s selection process is just one early example. The complexity and scale of these risks mean they are no longer confined to the CIO’s domain but require direct attention from the CEO and the broader C-suite.

"This shift is not just about managing risks. C-suite engagement can ensure AI delivers its promised value. Effective governance can provide a competitive edge by ensuring responsible innovation, safeguarding brand reputation, and enabling transparency. CEOs must lead the charge in embedding AI ethics and governance into their organization’s culture, setting the tone for accountability and aligning AI initiatives and use cases with broader business strategy.

"In this phase of accelerated AI adoption, the CEO’s involvement in governance is not optional—it’s a business imperative. The companies that recognize this will not only mitigate risks but also position themselves to unlock AI’s full potential responsibly.”

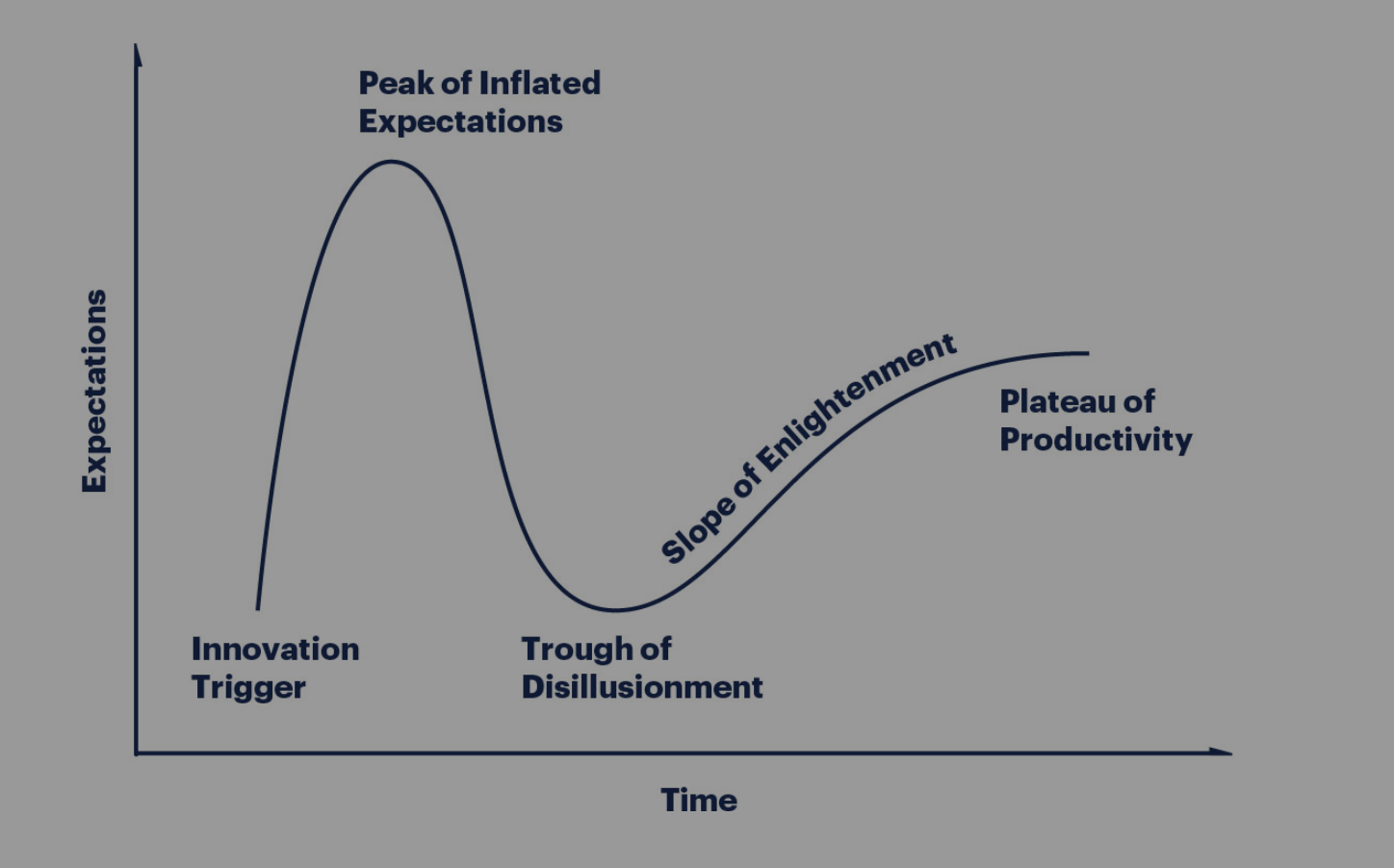

The hype cycle reaches a plateau of productivity

Monish Darda, CTO and Co-Founder, Icertis

“2025 will be the year that you can’t do business without AI. But leaders have grown weary of the hype and are demanding real value from their AI investments. The next 12 months will be defined by the emergence of agentic frameworks that address common GenAI challenges and enable greater value, while the larger AI market continues to be characterised by coexistence – rather than consolidation – among competitors.

"The rapid pace of AI innovation compounded by economic uncertainty may also cause many tech leaders to pull back on system investments out of fear that their enterprise platforms will become redundant. This is a critical error, and the key to harnessing GenAI’s full potential lies not in pausing investments but in accelerating the adoption of SaaS platforms that are AI ready.

"We will no doubt reach the plateau of productivity in AI’s hype cycle faster than any other technology in history. The path forward will also include a second wave of disillusionment as regulation and superficial AI use cases temper expectations. But history proves that failure to invest in digital resiliency leads to missed opportunities. Companies that invest wisely today will stay ahead of the curve in 2025 and beyond.”

Humans carve out a new role in the AI revolution

Mike Donoghue, Co-founder & CEO, Subtext

"By 2025, AI will be an incredible boon for the messaging ecosystem, but only inasmuch as it helps scale human interaction versus faking it. We'll likely see AI applied to help creators, brands, and media companies better understand their audiences by listening at scale. It'll also assist in crafting high-value messaging that resonates. However, some organizations might take the automation concept too far, attempting to use AI to voice messages from celebrities or artists, repeating errors we've seen from Meta which had to be corrected. The real opportunity lies in using AI to enhance our understanding of audience needs and preferences, allowing for more meaningful human-to-human interactions.

"As AI-generated content becomes more mainstream, human expertise and deep domain knowledge will become crucial differentiators. We might see a return to relying on star reporters to build audiences, similar to how media companies operated in the past. The industry may see a trend of bundling multiple niche audiences under one brand, which could be a way forward for some publishers. In a world of AI-generated content, the ability to provide genuine human insight and expertise will set content creators apart. Direct messaging platforms can play a key role here, allowing these experts to deliver their insights personally to their most engaged followers."

For tomorrow's models, small is beautiful

Grant Shipley, Sr. Director, AI, Red Hat

"2025 will be the year when we stop using the number of parameters that models have as a metric to indicate the value of a model. Models will evolve to be smaller in size and built for specific purposes. In turn, developers will begin to chain multiple small models together much like we do with microservices in the software industry to accomplish a set of tasks for their applications."

Europe sets the scene for AI regulation

Matt Riley, Data Protection and Information Security Officer, Sharp Europe and UK

"The EU AI Act will provide quite a bit of transparency and protection for users of AI tools – but we’re unlikely to see any meaningful adherence to it until 2026 when AI providers will essentially be forced to comply. It’s for that reason that 2025 will be the year that people and businesses will be exposed to the most risk with AI tools.

"There are currently no real rules to what happens with the data we provide in exchange for the use of certain AI tools. This is much less of a concern for big players such as Microsoft, which provide structure, security and rigour to their offerings. But that’s not always the case for smaller, fringe AI companies or online service providers that leverage AI. IT leaders need to ensure they’re reading terms and conditions very carefully before approving the use of AI applications for work – or risk exposing their organisations and businesses to unknown entities."

But will the AI Act stifle innovation?

Karl Havard, CCO, Nscale

“The EU’s AI Act is a well-intentioned piece of regulation, stimulating conversations about the ethical use of AI and responsible AI development – but it’s lengthy, hard to enforce and risks putting the EU at a disadvantage. Guidelines that minimise the risks associated with AI are important, but this regulation is arguably stifling innovation through an overly restrictive framework.

“With the global AI ecosystem evolving at pace, companies can’t afford to lose time or dial down innovation. So, looking at 2025, we may see the regulation push key industry players to shift operations to other markets with less stringent regulation. This would be a huge loss for Europe, and detrimental to the vibrant AI industry developing in this region. Preventing this from happening requires that we keep supplying European companies with the AI tools they need to innovate in the future irrespective of the challenges posed by regulation.”

The robots are coming (slowly)

Stefan Weitz, co-founder & CEO, HumanX

"Sure, I’m excited about Tesla’s Optimus robot, but more for its remarkable degrees of freedom and dexterity than its autonomous nature (which isn’t there yet). That said, there will be real improvements driven by simpler robots in 2025 like the ones from Softbank Robotics for things like food delivery and cleaning. The more humanoid robots I predict won’t see any mass scale next year, although work happening at Collaborative Robotics in the US and the frankly bonkers Astribot in China are showing real promise.

"Synthetic virtual people indistinguishable from real humans will enter the workforce, even if in limited ways, leading to debates about employment rights and creating a push for “AI citizenship” to define their societal roles and limitations. Companies like Aaru are pioneering this new take on a “digital twin” by providing companies with the ability to ask questions of every ‘human’ on the planet in seconds rather than relying on traditional polling or panels.

"At least one major, globally recognized company will fail or significantly downsize due to an inability to compete with one or more AI-native startups. Rapid innovation cycles and the horizontal application of AI will render slow movers obsolete."

AI and quantum create new security risks

Brian Spanswick, CIO and CISO at Cohesity

"This last year has given a clearer understanding of the security threat created by AI technology. The attack techniques that leverage AI most include brute force and social engineering attacks. In addition to making breaches of digital assets more effective, these AI-enabled techniques have greatly reduced the time to breach. The number one variable in reducing the impact of a breach is the time taken to respond to and contain it. With the emergence of AI technology, cyber security teams will have significantly less time to respond to and contain a breach before its impacts are realised.

"Many of the fears expressed after the arrival of ChatGPT and the presence of AI in everyday life have not materialised like autonomous systems threatening human engagement and AI’s potential to brute force data encryption. This is not meant to downplay the impact that we’ve seen with the emergence of AI, this is meant to illustrate the significantly greater impact we are going to see from the advent of quantum computing. In 2025 organisations need to at least develop a plan to migrate to encryption that isn't vulnerable to quantum computing attacks."

Edging towards AGI (artificial general intelligence)

Debo Dutta, VP of Engineering, AI, Nutanix

"We will get closer to AGI in 2025 via reasoning models like OpenAI o1 and its competition from Meta Llama and Alibaba. This will give AI models significantly more capabilities than today.

"GenAI leadership is open, small and global. We will see a lot of open permissible models that can do as well as, if not better than, closed models. We will see open reasoning models too. In addition, models will get compressed by learning from bigger, more powerful models.

"Inference scaling, or scaling computation during inference time, will dramatically increase the cost of AI inference. Enterprises will need to revisit their infrastructure and power investments due to this new technology trend.

"Multi-agent AI, or systems of cooperating agents, will take center stage. This will involve a collection of AI agents working cooperatively and will need new people, processes and technology to enable this in the enterprise. I also anticipate agents will negotiate with other agents. AI inference will also become even more important with the growth of reasoning models and agents.

"Cloud robotics will become a reality and enter the enterprise. This will lead to shared services and data infrastructure spending, especially in the manufacturing sector. The same design pattern will also be true for managing multi-agent software in the enterprise."
